Optimize Cloud Server Performance: A Comprehensive Guide to Enhanced Efficiency

This guide covers techniques for optimizing cloud server performance: resource monitoring and allocation, application architecture, caching and content delivery, load balancing and autoscaling, and the trade-offs between security and performance.

It focuses on practical implementation and real-world applications, so that readers can apply each technique to their own cloud servers.

Resource Utilization Monitoring and Optimization

Maximizing the performance of cloud servers requires continuous monitoring and optimization of resource utilization. This involves tracking and analyzing metrics related to CPU, memory, and network usage to identify potential bottlenecks and underutilized resources.

Effective resource utilization monitoring can be achieved through the use of various tools and techniques. These include:

- Cloud monitoring services: These services provide real-time monitoring and alerting capabilities for key metrics, enabling administrators to quickly identify and address issues.

- Performance monitoring tools: These tools allow for detailed analysis of resource usage patterns, helping to identify trends and potential performance issues.

- Log analysis: Analyzing server logs can provide insights into resource usage patterns and help identify areas for improvement.
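As a rough illustration of what such monitoring looks for, the sketch below averages a few utilization samples and labels each resource as a bottleneck, underutilized, or acceptable. The `MetricSample` type, the `classify_utilization` function, and the 85% and 20% thresholds are all assumptions made for this example; real monitoring services expose their own metrics and alerting rules.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class MetricSample:
    """One utilization reading, with each value a percentage (0-100)."""
    cpu: float
    memory: float
    network: float


def classify_utilization(samples, high=85.0, low=20.0):
    """Label each resource by its average utilization across the samples.

    `high` and `low` are illustrative thresholds, not recommended values.
    """
    if not samples:
        raise ValueError("at least one sample is required")
    report = {}
    for resource in ("cpu", "memory", "network"):
        average = mean(getattr(sample, resource) for sample in samples)
        if average >= high:
            report[resource] = "bottleneck"
        elif average <= low:
            report[resource] = "underutilized"
        else:
            report[resource] = "ok"
    return report


# Hypothetical readings collected over three intervals.
samples = [
    MetricSample(cpu=92.0, memory=55.0, network=10.0),
    MetricSample(cpu=88.0, memory=60.0, network=12.0),
    MetricSample(cpu=95.0, memory=58.0, network=8.0),
]
print(classify_utilization(samples))
```

Averaging hides short spikes, so a production setup would typically also look at percentiles or maximum values over the same window.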

Optimizing Resource Allocation

Once resource utilization data has been collected, it is important to optimize resource allocation to ensure that applications and services are running efficiently. This involves:

- Rightsizing instances: Selecting the appropriate instance size for the workload can help avoid overprovisioning and underprovisioning, optimizing cost and performance.

- Vertical scaling: Increasing the resources allocated to an instance (e.g., CPU, memory) can improve performance for demanding workloads.

- Horizontal scaling: Adding more instances to distribute the load can improve scalability and performance.
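Rightsizing can be sketched as picking the smallest instance that covers observed peak demand plus some headroom. The instance catalog below, the `rightsize` function, and the 20% headroom are hypothetical values chosen for illustration and do not correspond to any provider's actual offerings.

```python
# Hypothetical catalog, sorted smallest first: (name, vCPUs, memory in GiB).
INSTANCE_SIZES = [
    ("small", 2, 4),
    ("medium", 4, 8),
    ("large", 8, 16),
    ("xlarge", 16, 32),
]


def rightsize(peak_vcpus, peak_memory_gib, headroom=0.2):
    """Return the smallest instance name that fits peak demand plus headroom.

    Returns None when no single instance is large enough, which is a hint
    that horizontal scaling may be the better option.
    """
    needed_vcpus = peak_vcpus * (1 + headroom)
    needed_memory = peak_memory_gib * (1 + headroom)
    for name, vcpus, memory_gib in INSTANCE_SIZES:
        if vcpus >= needed_vcpus and memory_gib >= needed_memory:
            return name
    return None


print(rightsize(peak_vcpus=3, peak_memory_gib=6))
print(rightsize(peak_vcpus=20, peak_memory_gib=10))
```

Moving from one catalog entry to a larger one corresponds to vertical scaling; a `None` result marks the point where adding instances is the remaining choice.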

Reducing Bottlenecks

Identifying and eliminating bottlenecks is crucial for optimizing cloud server performance. Common bottlenecks include:

- CPU bottlenecks: High CPU utilization can lead to slow performance and increased latency. Optimizing code, reducing unnecessary processing, and scaling up instances can help alleviate CPU bottlenecks.

- Memory bottlenecks: Insufficient memory can cause performance degradation and crashes. Increasing memory allocation, optimizing memory usage, and using caching techniques can help resolve memory bottlenecks.

- Network bottlenecks: Slow network connectivity can impact application performance. Optimizing network configurations, using content delivery networks (CDNs), and increasing network bandwidth can help reduce network bottlenecks.

Capacity Planning and Forecasting

Effective capacity planning and forecasting are essential for ensuring that cloud servers have sufficient resources to meet future demand. This involves:

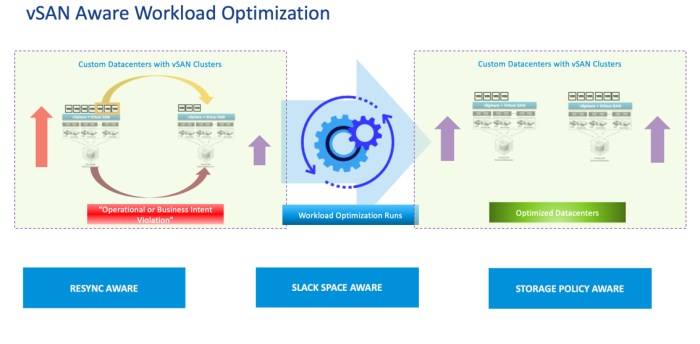

- Workload analysis: Understanding the characteristics and growth patterns of the workload can help determine future resource requirements.

- Trend analysis: Analyzing historical resource usage data can help identify trends and predict future demand.

- Capacity planning tools: Using tools that simulate workload and resource usage can help forecast future capacity needs.
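Trend analysis in its simplest form fits a straight line to historical usage and extends it forward. The following is a minimal sketch that assumes evenly spaced samples and linear growth; the function names and the sample data are invented for the example, and real workloads often need seasonal or non-linear models.

```python
def fit_linear_trend(values):
    """Least-squares line through (0, values[0]), (1, values[1]), ...

    Returns (slope, intercept).
    """
    n = len(values)
    if n < 2:
        raise ValueError("at least two data points are required")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def forecast(values, periods_ahead):
    """Extrapolate the fitted line `periods_ahead` steps past the last sample."""
    slope, intercept = fit_linear_trend(values)
    future_x = len(values) - 1 + periods_ahead
    return slope * future_x + intercept


# Hypothetical monthly average CPU utilization, in percent.
monthly_cpu = [40, 45, 50, 55, 60]
print(forecast(monthly_cpu, periods_ahead=3))
```

Comparing the forecast against a utilization ceiling gives a rough estimate of when additional capacity will be needed.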

Application Architecture and Design

Designing cloud-native applications for optimal performance requires a focus on scalability, resilience, and cost-effectiveness. Cloud-native architectures embrace modern technologies such as microservices, containers, and serverless computing to achieve these goals.

Microservices

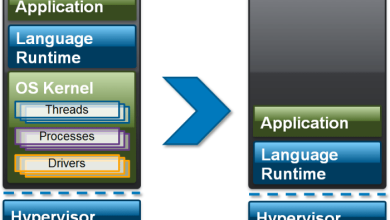

Microservices decompose monolithic applications into smaller, independent services. Each microservice performs a specific function, enabling greater flexibility, scalability, and maintainability. By breaking down complex applications into smaller components, developers can iterate and deploy changes more quickly and efficiently.

Containerization

Containerization packages applications and their dependencies into lightweight, portable containers. This isolation allows applications to run consistently across different environments, including on-premises, cloud, and hybrid deployments. Containerization simplifies application management, reduces infrastructure costs, and improves application portability.

Serverless Architectures

Serverless architectures eliminate the need for managing servers, allowing developers to focus solely on writing code. Cloud providers handle server provisioning, scaling, and maintenance, freeing up resources and reducing operational costs. Serverless architectures enable developers to build highly scalable and responsive applications without the overhead of managing infrastructure.

Database Optimization and Latency Reduction

Optimizing database performance is crucial for reducing latency and improving application responsiveness. Cloud-based databases offer various features for optimization, including automated indexing, data partitioning, and caching mechanisms. Additionally, techniques such as query optimization and reducing database calls can further enhance performance and reduce latency.
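One common way to reduce database calls is to replace a loop of single-row lookups with one batched query. The sketch below uses Python's built-in `sqlite3` module with an in-memory database purely for illustration; the `users` table and both function names are assumptions, and the same idea applies to any cloud-hosted relational database.

```python
import sqlite3


def fetch_users_one_by_one(conn, user_ids):
    """Issue one query per ID: len(user_ids) round trips to the database."""
    rows = []
    for user_id in user_ids:
        row = conn.execute(
            "SELECT id, name FROM users WHERE id = ?", (user_id,)
        ).fetchone()
        if row is not None:
            rows.append(row)
    return rows


def fetch_users_batched(conn, user_ids):
    """Fetch all requested rows with a single parameterized query."""
    if not user_ids:
        return []
    placeholders = ",".join("?" for _ in user_ids)
    query = f"SELECT id, name FROM users WHERE id IN ({placeholders}) ORDER BY id"
    return conn.execute(query, list(user_ids)).fetchall()


# In-memory database with a hypothetical table, for demonstration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany(
    "INSERT INTO users (id, name) VALUES (?, ?)",
    [(1, "ada"), (2, "grace"), (3, "linus")],
)
print(fetch_users_batched(conn, [1, 3]))
```

Both functions return the same rows for sorted input, but the batched version pays the network round-trip cost once, which matters most when the database sits on a separate host.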

Caching and Content Delivery

Caching and content delivery are critical techniques for optimizing cloud server performance. Caching mechanisms reduce server load by storing frequently requested data in memory or on disk, while content delivery networks (CDNs) distribute content across multiple servers to improve response times and reduce latency.

Implementing Caching Mechanisms

- In-memory caching: Stores frequently accessed data in memory for fast retrieval.

- Disk caching: Stores less frequently accessed data on disk, providing a balance between speed and storage capacity.

- Object caching: Caches entire objects, such as images or documents, to avoid the need to retrieve them from the database.
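An in-memory cache needs a bound on its size, and a least-recently-used (LRU) eviction policy is one common choice. The sketch below is a minimal example built on `collections.OrderedDict`; the `LRUCache` class and its `loader` callback are illustrative names, not part of any particular caching product.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity in-memory cache that evicts the least recently used key."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._items = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key, loader):
        """Return the cached value, calling `loader(key)` on a miss."""
        if key in self._items:
            self._items.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._items[key]
        self.misses += 1
        value = loader(key)
        self._items[key] = value
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # drop the least recently used
        return value


def slow_lookup(key):
    """Stand-in for an expensive database or disk read (hypothetical)."""
    return key.upper()


cache = LRUCache(capacity=2)
for key in ["a", "b", "a", "c", "b"]:
    cache.get(key, slow_lookup)
print(cache.hits, cache.misses)
```

The hit and miss counters make it possible to measure whether the chosen capacity is large enough for the access pattern.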

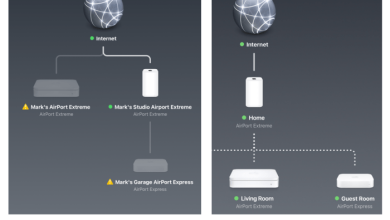

Content Delivery Networks (CDNs)

CDNs consist of a network of servers distributed across different geographic locations. When a user requests content, the CDN delivers it from the server closest to their location, reducing latency and improving user experience.

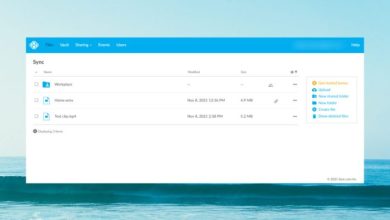

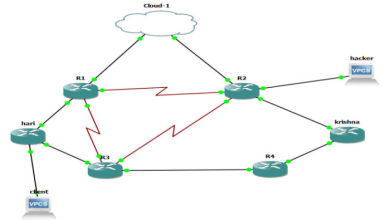

- CDN configuration: Configure the CDN to cache static content, such as images and CSS files, and set appropriate cache expiration times.

- CDN monitoring: Monitor CDN performance to identify any bottlenecks or areas for improvement.
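Cache expiration for static content is usually communicated to a CDN through the HTTP `Cache-Control` response header. The sketch below shows one way an origin server might choose that header by file extension; the `CACHE_LIFETIMES` table, its durations, and the `cache_control_for` function are assumptions for illustration, and each CDN also has its own configuration options that can override origin headers.

```python
from pathlib import PurePosixPath

# Illustrative lifetimes in seconds; appropriate values depend on how
# often each asset type changes.
CACHE_LIFETIMES = {
    ".css": 86400,    # 1 day
    ".js": 86400,     # 1 day
    ".png": 604800,   # 7 days
    ".jpg": 604800,   # 7 days
}


def cache_control_for(path):
    """Return a Cache-Control header value for the given URL path."""
    suffix = PurePosixPath(path).suffix.lower()
    max_age = CACHE_LIFETIMES.get(suffix)
    if max_age is None:
        # Unknown or dynamic content: require revalidation with the origin.
        return "no-cache"
    return f"public, max-age={max_age}"


print(cache_control_for("/static/site.css"))
print(cache_control_for("/api/orders"))
```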

Cache Invalidation and Minimizing Cache Misses

Cache invalidation ensures that cached data is up-to-date when the original data changes. Cache misses occur when requested data is not found in the cache, leading to increased server load.

- Cache invalidation strategies: Implement strategies like timestamp-based invalidation or dependency tracking to keep cached data fresh.

- Minimizing cache misses: Use techniques like cache preloading and cache warming to populate the cache with frequently accessed data before user requests.
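Timestamp-based invalidation and cache warming can be combined in a small sketch: each cached entry remembers the modification time it was loaded at, and is reloaded only when the origin reports a newer one. The `Origin` and `TimestampCache` classes below are hypothetical stand-ins, and the example assumes that checking a modification time is much cheaper than reading the full value.

```python
class Origin:
    """Stand-in for the authoritative data store (hypothetical)."""

    def __init__(self):
        self._rows = {}  # key -> (value, modified_at)
        self.reads = 0   # counts expensive full reads

    def write(self, key, value, modified_at):
        self._rows[key] = (value, modified_at)

    def last_modified(self, key):
        return self._rows[key][1]

    def read(self, key):
        self.reads += 1
        return self._rows[key][0]


class TimestampCache:
    """Cache whose entries are invalidated when the origin has newer data."""

    def __init__(self, origin):
        self._origin = origin
        self._entries = {}  # key -> (value, modified_at when cached)

    def get(self, key):
        modified_at = self._origin.last_modified(key)
        entry = self._entries.get(key)
        if entry is not None and entry[1] >= modified_at:
            return entry[0]  # cached copy is still fresh
        value = self._origin.read(key)  # miss or stale entry: reload
        self._entries[key] = (value, modified_at)
        return value

    def warm(self, keys):
        """Preload the given keys before any user request arrives."""
        for key in keys:
            self.get(key)


origin = Origin()
origin.write("home", "v1", modified_at=100)
cache = TimestampCache(origin)
cache.warm(["home"])                         # one origin read
cache.get("home")                            # served from cache
origin.write("home", "v2", modified_at=200)  # the data changes
print(cache.get("home"), origin.reads)
```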

Load Balancing and Autoscaling

Cloud servers provide scalable, cost-efficient solutions for handling varying workloads. Load balancing and autoscaling techniques are crucial for optimizing performance and ensuring application availability during peak traffic or sudden load increases.

Load Balancing Techniques

- Round-robin: Distributes traffic evenly across servers.

- Weighted round-robin: Assigns more traffic to servers with higher capacity.

- Least connections: Directs traffic to servers with the fewest active connections.

- Least response time: Routes traffic to servers with the lowest response times.

- IP hashing: Assigns traffic to a specific server based on the client’s IP address.
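Several of these selection strategies fit in a few lines each. The sketch below is a simplified illustration with invented function names and server labels; production load balancers add health checks, connection tracking, and smoother weighting than the naive list expansion used here.

```python
import hashlib
from itertools import cycle


def round_robin(servers):
    """Return an iterator that yields servers in a repeating, even rotation."""
    return cycle(servers)


def weighted_round_robin(weights):
    """Yield each server proportionally to its integer weight.

    `weights` maps server name -> weight; higher weight means more traffic.
    """
    expanded = [server for server, weight in weights.items() for _ in range(weight)]
    return cycle(expanded)


def least_connections(active_connections):
    """Pick the server with the fewest active connections."""
    return min(active_connections, key=active_connections.get)


def ip_hash(client_ip, servers):
    """Map a client IP to a server so the same client reaches the same server."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["app-1", "app-2", "app-3"]  # hypothetical server names
rotation = round_robin(servers)
print([next(rotation) for _ in range(4)])
print(least_connections({"app-1": 5, "app-2": 2, "app-3": 7}))
print(ip_hash("203.0.113.7", servers) == ip_hash("203.0.113.7", servers))
```

With plain modulo hashing, adding or removing a server remaps most clients; consistent hashing is the usual remedy when session stickiness matters.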

Autoscaling Policies

Autoscaling policies define rules for automatically adjusting the number of servers based on workload demand. They can be:

- Reactive: Scales up or down based on current metrics (e.g., CPU utilization).

- Predictive: Anticipates future demand based on historical data and trends.

- Manual: Allows manual scaling adjustments.
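A reactive policy can be sketched as a proportional rule: scale the instance count by the ratio of observed utilization to a target, then clamp the result to configured bounds. The `desired_instances` function, the 60% target, and the bounds below are assumptions for illustration; managed autoscalers also apply cooldown periods and smoothing that this example omits.

```python
import math


def desired_instances(current, cpu_percent, target=60.0, minimum=1, maximum=10):
    """Return the instance count that would bring average CPU near `target`.

    `current` is the number of running instances and `cpu_percent` is their
    average CPU utilization. The result is clamped to [minimum, maximum].
    """
    if current < 1:
        raise ValueError("current must be at least 1")
    if target <= 0:
        raise ValueError("target must be positive")
    proportional = math.ceil(current * cpu_percent / target)
    return max(minimum, min(maximum, proportional))


print(desired_instances(current=4, cpu_percent=90))  # scale out
print(desired_instances(current=4, cpu_percent=30))  # scale in
```

A predictive policy could feed a forecast utilization into the same function instead of the current reading.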

Managed Load Balancing Services

Managed load balancing services provide pre-configured and managed load balancers that simplify setup and maintenance. They offer:

- Automated failover: Reroutes traffic away from failed servers to keep the service available.

- Global reach: Distributes traffic across multiple regions for improved latency.

- SSL offloading: Improves performance by handling SSL/TLS encryption and decryption on the load balancer instead of on the application servers.

Security and Performance

Securing cloud servers while maintaining optimal performance requires a delicate balance. This section explores security measures to protect cloud servers from vulnerabilities, examines how security configurations impact performance, and provides strategies for mitigating the impact. Additionally, it discusses best practices for implementing security monitoring and intrusion detection systems.

Security Measures to Protect Cloud Servers

- Implement firewalls to restrict access to authorized traffic.

- Enable intrusion detection and prevention systems (IDS/IPS) to monitor and block malicious activity.

- Use strong encryption algorithms to protect sensitive data.

- Regularly update software and security patches to address vulnerabilities.

- Implement access control mechanisms such as role-based access control (RBAC) to limit user privileges.

Impact of Security Configurations on Performance

Security configurations can impact performance by adding overhead to network traffic and increasing resource consumption. However, by optimizing security configurations and implementing performance-aware security measures, it is possible to mitigate the impact on performance.

Strategies for Mitigating the Impact

- Use lightweight security tools and configurations that minimize overhead.

- Where it suits the workload, implement security measures at the application level rather than at the network level, which can reduce latency.

- Regularly monitor performance metrics and adjust security configurations as needed to maintain optimal performance.

Security Monitoring and Intrusion Detection

Effective security monitoring and intrusion detection are crucial for protecting cloud servers. Implementing security monitoring tools and intrusion detection systems (IDS) allows for real-time detection of and response to security threats. An IDS can be configured to monitor network traffic, system logs, and file integrity to identify malicious activity, and an intrusion prevention system (IPS) can additionally block it.

Closing Summary

In conclusion, optimizing cloud server performance is an ongoing process that requires a holistic approach, encompassing resource management, application design, caching strategies, load balancing, security measures, and continuous monitoring. By applying the techniques outlined in this guide, organizations can improve the performance, reliability, and resilience of their cloud infrastructure.