Cloud Diagram Server Performance: Optimizing for Peak Efficiency

Cloud diagram server performance is crucial for businesses that rely on cloud-based applications and services. By understanding the factors that affect server performance and implementing effective optimization techniques, organizations can ensure that their cloud infrastructure delivers optimal performance and a seamless user experience.

This comprehensive guide delves into the key aspects of cloud diagram server performance, providing insights into server resource utilization, network performance, application performance monitoring, cloud infrastructure design, and performance optimization techniques.

Server Resource Utilization

Server resource utilization refers to the degree to which the hardware and software resources of a server are being used. Monitoring and analyzing server resource utilization is crucial for ensuring optimal performance and preventing system failures.

CPU Utilization

- CPU utilization measures the percentage of time the server’s central processing unit (CPU) is actively processing instructions.

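- Sustained high CPU utilization can lead to slow response times and performance degradation; when requests arrive faster than the CPU can process them, they queue up and may time out or leave the service unresponsive.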

- Monitoring CPU utilization can help identify bottlenecks and optimize resource allocation.
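CPU utilization is usually derived from two snapshots of cumulative CPU time counters: the busy share of the time that elapsed between the snapshots. The sketch below assumes the counters are already split into busy and idle totals (on Linux, the `cpu` line of /proc/stat provides such cumulative counters); `CpuTimes` and `cpu_utilization` are hypothetical names used for illustration.

```python
from dataclasses import dataclass


@dataclass
class CpuTimes:
    """Cumulative CPU time counters (assumed source, e.g. /proc/stat on Linux)."""
    busy: float  # user + system + other non-idle time
    idle: float  # idle time (add iowait here if you treat it as idle)


def cpu_utilization(before: CpuTimes, after: CpuTimes) -> float:
    """Return CPU utilization (0-100 %) over the interval between two samples."""
    busy_delta = after.busy - before.busy
    idle_delta = after.idle - before.idle
    total = busy_delta + idle_delta
    if total <= 0:
        return 0.0  # no time elapsed between samples
    return 100.0 * busy_delta / total


# 300 busy ticks and 100 idle ticks elapsed between the two samples
print(cpu_utilization(CpuTimes(busy=1000, idle=9000), CpuTimes(busy=1300, idle=9100)))  # 75.0
```

Sampling at a fixed interval and comparing deltas, rather than reading a single instantaneous value, is what makes short spikes and sustained load distinguishable.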

Memory Utilization

- Memory utilization measures the amount of physical memory (RAM) being used by the server.

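- When physical memory runs short, the operating system may swap memory pages to disk, which is far slower than RAM and significantly impacts performance; if memory is exhausted entirely, processes may be terminated.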

- Monitoring memory utilization can help identify memory leaks and optimize memory usage.
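A minimal sketch of a memory check, assuming a Linux host where /proc/meminfo reports `MemTotal`, `MemAvailable`, `SwapTotal`, and `SwapFree` in kB. Using `MemAvailable` rather than `MemFree` avoids counting reclaimable page cache as "used". The function names and the sample text are hypothetical, chosen so the example runs anywhere.

```python
def parse_meminfo(text: str) -> dict[str, int]:
    """Parse /proc/meminfo-style lines ("Key:   value kB") into {key: value}."""
    fields: dict[str, int] = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        parts = rest.split()
        if sep and parts:
            fields[key.strip()] = int(parts[0])  # values are in kB
    return fields


def memory_utilization(text: str) -> float:
    """Percent of RAM in use, treating reclaimable cache as available."""
    info = parse_meminfo(text)
    return 100.0 * (info["MemTotal"] - info["MemAvailable"]) / info["MemTotal"]


def swap_used_kb(text: str) -> int:
    """Swap in use; a non-zero, growing value often signals memory pressure."""
    info = parse_meminfo(text)
    return info["SwapTotal"] - info["SwapFree"]


sample = """MemTotal:       16000000 kB
MemFree:         1000000 kB
MemAvailable:    4000000 kB
SwapTotal:       2000000 kB
SwapFree:        2000000 kB"""
print(memory_utilization(sample), swap_used_kb(sample))  # 75.0 0

# On a Linux host you could read the live file instead:
# with open("/proc/meminfo") as f:
#     print(memory_utilization(f.read()))
```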

Disk Utilization

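- Disk utilization usually measures the amount of storage space being used on the server’s hard disk drives (HDDs) or solid-state drives (SSDs); the term is also used for the percentage of time a disk is busy servicing I/O requests.

- A nearly full disk can cause failed writes (risking data loss), slow file access, and application errors, while a disk that is constantly busy with I/O becomes a performance bottleneck.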

- Monitoring disk utilization can help identify storage capacity issues and optimize storage usage.
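For the capacity side of disk utilization, Python's standard `shutil.disk_usage` returns the total, used, and free bytes of the filesystem containing a path. The sketch below turns that into a percentage and a simple alert flag; the 85% threshold is an arbitrary assumption, not a recommendation from this guide.

```python
import shutil


def disk_utilization(path: str = "/") -> float:
    """Percent of the filesystem containing `path` that is in use."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
    return 100.0 * usage.used / usage.total


def needs_attention(path: str = "/", threshold_pct: float = 85.0) -> bool:
    """Flag the filesystem once usage crosses an (assumed) alert threshold."""
    return disk_utilization(path) >= threshold_pct


print(f"{disk_utilization('/'):.1f}% used, alert={needs_attention('/')}")
```

This covers capacity only; I/O busy time has to come from OS-level disk statistics instead.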

Network Utilization

- Network utilization measures the amount of bandwidth being used by the server’s network interface card (NIC).

- High network utilization can cause network congestion, slow network access, and application performance issues.

- Monitoring network utilization can help identify network bottlenecks and optimize network resource allocation.
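Network utilization is typically computed the same way as CPU utilization: take two readings of a cumulative byte counter, convert the delta to bits, and divide by what the link could have carried in that interval. On Linux such counters exist per interface under /sys/class/net/&lt;iface&gt;/statistics/ (`rx_bytes`, `tx_bytes`); the helper below is a hypothetical sketch that assumes you already have the two readings and the link speed.

```python
def nic_utilization(bytes_before: int, bytes_after: int,
                    interval_s: float, link_speed_bps: float) -> float:
    """Percent of link capacity used in one direction, from two byte-counter readings."""
    bits = (bytes_after - bytes_before) * 8  # counters are in bytes, links in bits/s
    return 100.0 * bits / (interval_s * link_speed_bps)


# 1 GbE link (1e9 bit/s): 62,500,000 bytes received in 1 second
print(nic_utilization(0, 62_500_000, 1.0, 1e9))  # 50.0
```

Because most links are full duplex, receive and transmit utilization are usually tracked separately rather than summed.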

Network Performance

Network performance is a critical factor in ensuring optimal server performance. It involves the efficiency and reliability of data transmission between the server and its clients or other connected devices.

Various factors influence network performance, including bandwidth, latency, packet loss, and network congestion.

Bandwidth

Bandwidth refers to the amount of data that can be transmitted over a network connection within a given time frame. It is typically measured in bits per second (bps) or megabits per second (Mbps). Higher bandwidth allows for faster data transfer rates, reducing load times and improving overall responsiveness.
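To make the bits-versus-bytes distinction concrete, here is a back-of-the-envelope estimate of how long a payload takes to deliver. It is a simplification that assumes the full link rate is available and ignores protocol overhead, TCP slow start, and competing traffic; `transfer_time_s` is a hypothetical helper.

```python
def transfer_time_s(size_bytes: int, bandwidth_bps: float, latency_s: float = 0.0) -> float:
    """Idealized delivery time: one-way latency plus serialization time (bytes -> bits)."""
    return latency_s + (size_bytes * 8) / bandwidth_bps


# A 25 MB file over a 100 Mbps link takes about 2 seconds, not 0.25 seconds
print(transfer_time_s(25_000_000, 100e6))  # 2.0
```

For large transfers the bandwidth term dominates; for small requests the latency term does, which is why the next factor matters so much for interactive applications.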

Latency

Latency, also known as network delay, is the time it takes for data to travel from one point to another on the network. It is often measured in milliseconds (ms). High latency can result in slow response times and can significantly impact user experience, especially in real-time applications.
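One rough way to measure latency from application code, without the elevated privileges that ICMP usually requires, is to time TCP handshakes: connecting takes about one round trip plus local overhead (and a DNS lookup if you pass a hostname). The sketch below is an assumption-laden approximation, not a replacement for ping; the demo connects to a local listener so it runs without network access.

```python
import socket
import time


def tcp_connect_latency_ms(host: str, port: int, samples: int = 5,
                           timeout_s: float = 2.0) -> list[float]:
    """Time TCP handshakes to host:port as a rough proxy for round-trip time."""
    results = []
    for _ in range(samples):
        start = time.perf_counter()
        with socket.create_connection((host, port), timeout=timeout_s):
            pass  # handshake completed; close immediately
        results.append((time.perf_counter() - start) * 1000.0)
    return results


if __name__ == "__main__":
    # Local listener so the sketch is self-contained; the kernel completes
    # handshakes into the listen backlog even though we never call accept().
    with socket.socket() as srv:
        srv.bind(("127.0.0.1", 0))
        srv.listen(8)
        port = srv.getsockname()[1]
        print(tcp_connect_latency_ms("127.0.0.1", port, samples=3))
```

Against a real server you would pass its hostname and an open port (for example 443 for an HTTPS service you operate).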

Packet Loss

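Packet loss occurs when some data packets fail to reach their destination during transmission. It can be caused by network congestion, faulty equipment, or other network issues. With TCP, lost packets are detected and retransmitted, which adds delay and reduces throughput; with UDP-based traffic such as voice or video, lost data is usually not recovered and shows up as gaps, glitches, or application errors. Either way, packet loss reduces overall performance.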

Network Congestion

Network congestion occurs when the volume of data traffic exceeds the capacity of the network. This can result in slower data transfer rates, increased latency, and packet loss. Network congestion can be caused by high traffic volumes, insufficient bandwidth, or network bottlenecks.

Testing and Analyzing Network Performance

Regularly testing and analyzing network performance is essential for identifying and addressing any potential issues. Several tools and techniques can be used for this purpose, including:

- Ping: Measures round-trip latency and packet loss by sending a series of ICMP echo requests to a specific destination and counting the replies.

- Traceroute: Traces the path of data packets from the source to the destination, identifying any bottlenecks or high-latency points.

- Bandwidth testing tools (for example, iperf3): Measure the actual throughput available on a network connection.
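Whatever tool produces the raw measurements, the summary is usually the same: how many probes were sent, how many got replies, the loss percentage, and min/average/max round-trip time. The sketch below assumes the probe results have already been collected as a list of round-trip times in milliseconds, with `None` marking a probe that received no reply; `summarize_probes` is a hypothetical helper.

```python
from statistics import mean
from typing import Optional, Sequence


def summarize_probes(rtts_ms: Sequence[Optional[float]]) -> dict:
    """Ping-style summary; None marks a probe with no reply (counted as lost)."""
    received = [r for r in rtts_ms if r is not None]
    sent = len(rtts_ms)
    loss_pct = 100.0 * (sent - len(received)) / sent if sent else 0.0
    summary = {"sent": sent, "received": len(received), "loss_pct": loss_pct}
    if received:
        summary.update(min=min(received), avg=mean(received), max=max(received))
    return summary


# Two of five probes lost -> 40% packet loss
print(summarize_probes([20.1, 19.8, None, 25.3, None]))
```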

Impact of Network Latency and Packet Loss on Server Performance

High network latency and packet loss can significantly impact server performance. Latency delays every request and response exchanged between the server and its clients, leading to slow response times and a poor user experience. Packet loss triggers retransmissions and timeouts that increase response times further, and protocols that do not retransmit can surface it as missing data or application errors that disrupt server operations.

Application Performance Monitoring

Application performance monitoring (APM) refers to the tools and techniques used to monitor the performance of applications, including web applications, mobile apps, and desktop applications. APM provides insights into how applications are performing, helps identify and troubleshoot performance issues, and enables developers and IT professionals to optimize application performance for end-users.

Tools and Techniques for Analyzing Application Performance

Various tools and techniques are used for analyzing application performance, including:

- Synthetic monitoring: This involves simulating user actions and interactions with an application to measure performance metrics such as response times and page load times.

- Real user monitoring (RUM): This involves collecting data from actual user interactions with an application to provide insights into real-world performance.

- Code profiling: This involves analyzing application code to identify performance bottlenecks and optimize code for better performance.

- Log analysis: This involves analyzing application logs to identify performance issues and errors.
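Synthetic monitoring boils down to running a scripted action repeatedly and summarizing its response times, usually as percentiles rather than averages so that slow outliers remain visible. The sketch below times any callable and reports p50/p95 using the nearest-rank method; `run_synthetic_check` is a hypothetical name, and the demo probe simply sleeps so the example runs offline.

```python
import math
import time
from typing import Callable


def run_synthetic_check(probe: Callable[[], None], runs: int = 20) -> dict:
    """Call `probe` (a scripted user action) repeatedly; report latency percentiles in ms."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        probe()
        timings.append((time.perf_counter() - start) * 1000.0)
    timings.sort()

    def percentile(p: float) -> float:
        # Nearest-rank: smallest sample with at least p% of samples at or below it.
        rank = max(1, math.ceil(p / 100.0 * len(timings)))
        return timings[rank - 1]

    return {"runs": runs, "p50_ms": percentile(50), "p95_ms": percentile(95),
            "max_ms": timings[-1]}


# Stand-in probe; a real check might fetch a page or call an API endpoint.
print(run_synthetic_check(lambda: time.sleep(0.005), runs=10))
```

In practice the probe might be something like `lambda: urllib.request.urlopen(url, timeout=5).read()` against a health-check URL of your own; real user monitoring applies the same percentile summary to timings reported by actual clients.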

Impact of Application Performance on User Experience

Application performance has a significant impact on user experience. Slow or unresponsive applications can lead to frustration, decreased productivity, and abandonment. On the other hand, well-performing applications provide a positive user experience, increase engagement, and drive business success.

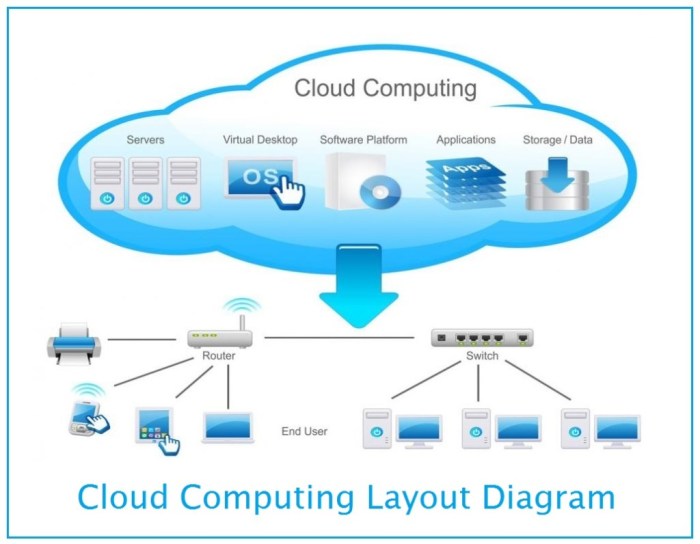

Cloud Infrastructure Design

Cloud infrastructure design plays a crucial role in ensuring optimal server performance. By understanding different design patterns and their trade-offs, organizations can create cloud infrastructures that meet their specific performance requirements.

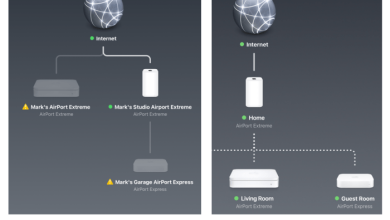

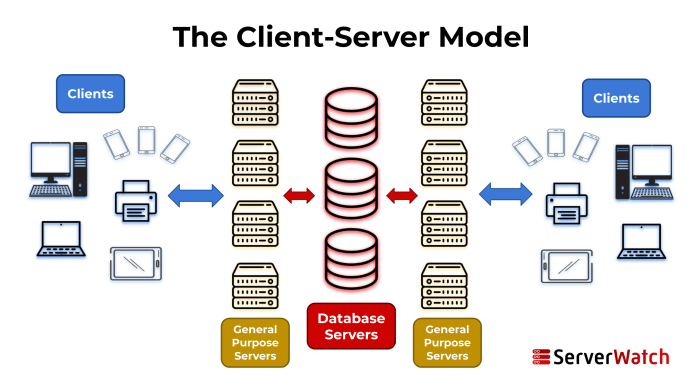

One common design pattern is the multi-tier architecture, which separates the application into distinct layers, such as presentation, application, and database. This approach improves scalability and performance by isolating each layer and allowing for independent scaling.

Another pattern is the microservices architecture, which decomposes the application into smaller, independent services. This approach promotes agility and fault tolerance by enabling individual services to be deployed, scaled, and updated without affecting the entire application.

The choice between these design patterns depends on factors such as the application’s complexity, scalability requirements, and fault tolerance needs.

Infrastructure Components

Cloud infrastructure design also involves selecting and configuring specific components, such as:

- Virtual machines (VMs): VMs provide a flexible and scalable way to run applications in the cloud. Organizations can choose from a variety of VM sizes and configurations to meet their performance needs.

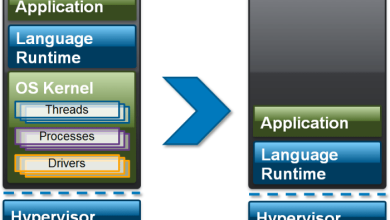

- Containers: Containers are lightweight and portable runtime environments that isolate applications from the underlying infrastructure. Compared with full virtual machines, containers can improve performance by reducing resource overhead and enabling faster deployment.

- Load balancers: Load balancers distribute traffic across multiple servers, ensuring high availability and performance. Organizations can choose from various load balancing algorithms to optimize traffic flow.

Performance Optimization

To optimize performance, cloud infrastructure design should consider the following best practices:

- Provisioning resources appropriately: Right-sizing VMs and containers to match application needs can prevent performance bottlenecks.

- Monitoring and scaling: Continuously monitoring infrastructure metrics and scaling resources based on demand can ensure optimal performance under varying loads.

- Optimizing network configuration: Configuring network settings, such as routing and security groups, can improve network performance and reduce latency.
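Scaling "based on demand" is often implemented as target tracking: pick a target value for a metric such as average CPU utilization, and resize the pool in proportion to how far the current value is from it. The sketch below uses the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, `ceil(current_replicas * current_metric / target_metric)`, clamped to assumed minimum and maximum bounds; it omits the tolerance band and stabilization windows that production autoscalers add to avoid flapping.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Proportional target-tracking rule, clamped to [min_replicas, max_replicas]."""
    desired = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, desired))


# 4 instances averaging 90% CPU against a 60% target -> scale out to 6
print(desired_replicas(4, current_metric=90.0, target_metric=60.0))  # 6
```

The same rule scales in when load drops, which is how right-sizing and demand-based scaling work together.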

Performance Optimization Techniques

Performance optimization techniques aim to improve the efficiency and responsiveness of cloud servers. By implementing these techniques, organizations can enhance the overall performance of their applications and services.

Server Configuration Optimization

Server configuration optimization involves adjusting various settings and parameters within the server’s operating system and software to improve performance. This can include optimizing memory allocation, CPU scheduling, and network settings. By fine-tuning these configurations, organizations can ensure that the server is utilizing its resources efficiently.

Code Optimization

Code optimization focuses on improving the efficiency of the application code running on the server. This involves identifying and eliminating performance bottlenecks within the code, such as inefficient algorithms or excessive database queries. By optimizing the code, organizations can reduce the processing time and improve the overall responsiveness of the application.

Caching

Caching involves storing frequently accessed data in a fast, temporary storage layer, such as an in-memory application cache or a database cache. The server can then retrieve the data from the cache instead of reading it from slower storage devices or recomputing it.

This technique significantly improves the performance of applications that read the same data frequently, at the cost of possibly serving stale data until cached entries expire or are invalidated.
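A minimal in-process cache combines two ideas: evict the least recently used entry when the cache is full, and expire entries after a time-to-live so stale data is eventually refreshed. The `TTLCache` class below is a hypothetical sketch built on `collections.OrderedDict`; Python's standard `functools.lru_cache` provides LRU eviction for function results but has no expiry.

```python
import time
from collections import OrderedDict
from typing import Any, Callable, Hashable


class TTLCache:
    """Small LRU cache whose entries expire after `ttl_s` seconds."""

    def __init__(self, max_items: int = 1024, ttl_s: float = 60.0):
        self.max_items = max_items
        self.ttl_s = ttl_s
        self._data: OrderedDict = OrderedDict()  # key -> (stored_at, value)

    def get_or_load(self, key: Hashable, loader: Callable[[], Any]) -> Any:
        now = time.monotonic()
        entry = self._data.get(key)
        if entry is not None and now - entry[0] < self.ttl_s:
            self._data.move_to_end(key)     # mark as most recently used
            return entry[1]                 # cache hit
        value = loader()                    # miss or expired: go to slow storage
        self._data[key] = (now, value)
        self._data.move_to_end(key)
        if len(self._data) > self.max_items:
            self._data.popitem(last=False)  # evict least recently used entry
        return value


loads = []

def load_profile() -> dict:
    loads.append(1)                         # stands in for a slow database read
    return {"id": 42}

cache = TTLCache(max_items=2, ttl_s=30.0)
cache.get_or_load("user:42", load_profile)
cache.get_or_load("user:42", load_profile)
print(len(loads))  # 1 (second call served from cache)
```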

Load Balancing

Load balancing distributes the incoming traffic across multiple servers to prevent overloading any single server. This technique helps ensure that the servers are utilized evenly, preventing performance degradation due to excessive load on a single server. Load balancing can be implemented using various methods, such as round-robin DNS or hardware load balancers.
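Two of the most common balancing algorithms can be sketched in a few lines: round-robin hands requests to servers in a fixed rotation, while least-connections sends each new request to the server currently handling the fewest. The classes and backend names below are hypothetical and ignore health checks and weights, which real load balancers add.

```python
import itertools


class RoundRobinBalancer:
    """Hand out backends in a fixed rotation."""

    def __init__(self, backends: list[str]):
        self._cycle = itertools.cycle(backends)

    def pick(self) -> str:
        return next(self._cycle)


class LeastConnectionsBalancer:
    """Send each new request to the backend with the fewest active connections."""

    def __init__(self, backends: list[str]):
        self.active = {b: 0 for b in backends}

    def acquire(self) -> str:
        backend = min(self.active, key=self.active.get)  # ties go to the first listed
        self.active[backend] += 1
        return backend

    def release(self, backend: str) -> None:
        self.active[backend] -= 1


rr = RoundRobinBalancer(["app-1", "app-2", "app-3"])
print([rr.pick() for _ in range(4)])  # ['app-1', 'app-2', 'app-3', 'app-1']

lc = LeastConnectionsBalancer(["app-1", "app-2"])
first = lc.acquire()   # app-1
second = lc.acquire()  # app-2
lc.release(first)
print(lc.acquire())    # app-1 (it now has the fewest active connections)
```

Round-robin works well when requests cost roughly the same; least-connections adapts better when some requests hold a server much longer than others.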

Monitoring and Analytics

Monitoring and analytics involve continuously collecting and analyzing data related to server performance. This data can include metrics such as CPU utilization, memory usage, and network traffic. By monitoring these metrics, organizations can identify performance issues early on and take proactive measures to address them.

Analytics tools can provide insights into the root causes of performance problems and help optimize the server configuration and application code.
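Identifying issues "early on" without drowning in false alarms usually means alerting on sustained conditions rather than single readings. The sketch below fires only when every sample in a sliding window exceeds a threshold; the class name, the 85% threshold, the window size, and the readings are all hypothetical values chosen for illustration.

```python
from collections import deque


class SustainedThresholdAlert:
    """Fire only when the last `window` samples all exceed `threshold`,
    so a single spike does not trigger an alert."""

    def __init__(self, threshold: float, window: int):
        self.threshold = threshold
        self.samples: deque = deque(maxlen=window)

    def observe(self, value: float) -> bool:
        self.samples.append(value)
        return (len(self.samples) == self.samples.maxlen
                and all(v > self.threshold for v in self.samples))


alert = SustainedThresholdAlert(threshold=85.0, window=3)
readings = [70, 95, 60, 90, 92, 97]  # hypothetical CPU % samples
print([alert.observe(r) for r in readings])
# [False, False, False, False, False, True]
```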

Conclusion

In conclusion, cloud diagram server performance is a multifaceted topic that requires a holistic approach to optimization. By monitoring and analyzing server resource utilization, network performance, and application performance, organizations can identify bottlenecks and implement targeted optimization techniques. This comprehensive guide has provided a roadmap for achieving optimal cloud server performance, enabling businesses to maximize the benefits of cloud computing and deliver exceptional user experiences.