Cloud Server Performance: Unveiling Differences Across Providers

Explore how cloud server performance differs across cloud providers. This article compares infrastructure, benchmarking methodologies, optimization techniques, and real-world case studies, examines the factors behind performance variations, and outlines best practices for getting the most out of your cloud deployments.

The goal is to help you make informed decisions about which provider and configuration best fit your business workloads.

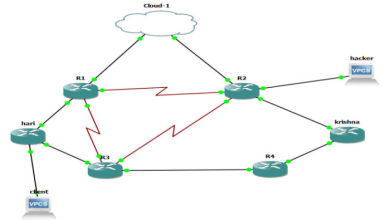

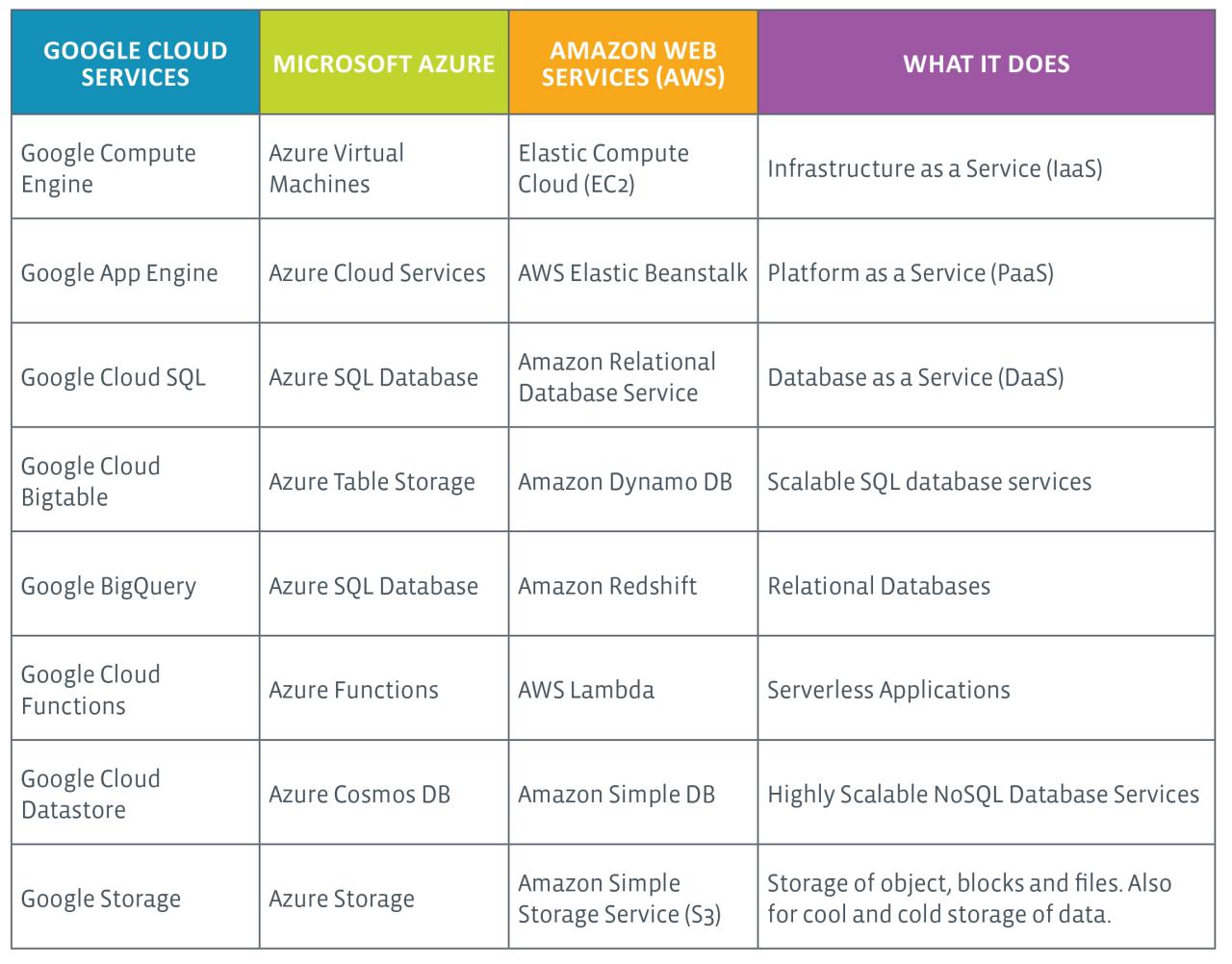

Cloud Server Infrastructure Performance Comparisons

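Cloud server infrastructure performance varies across providers because of differences in hardware specifications, virtualization technologies, and network connectivity. As a starting point for comparison, the following table lists the maximum resources cited for each provider. These limits depend on the instance family and change frequently, so confirm current figures in each provider's documentation.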

Table: Maximum Cloud Server Resources by Provider

| Provider | vCPUs (max) | RAM (max) | Local storage (max) | Network bandwidth (max) |
|---|---|---|---|---|
| AWS | 40 | 1 TB | 16 TB NVMe SSD | 100 Gbps |
| Azure | 64 | 4 TB | 8 TB NVMe SSD | 200 Gbps |
| GCP | 96 | 624 GB | 12 TB NVMe SSD | 100 Gbps |

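According to the table, GCP offers the most vCPUs, Azure offers the most RAM and the highest network bandwidth, and AWS offers the largest local NVMe storage. No single provider leads on every dimension, so the best choice depends on which resource constrains your workload. All three providers also offer specialized instance families (for example, memory-optimized instances) that exceed several of these figures.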
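To check which provider leads in each column, a small sketch can encode the table's figures and compute the top value per metric. The dictionary keys and the `leaders` function are illustrative names, and 1 TB is treated as 1024 GB; the numbers are the table's, not current provider-wide limits.

```python
from __future__ import annotations

# Figures transcribed from the table above (RAM in GB with 1 TB = 1024 GB,
# storage in TB, network in Gbps). Not current provider-wide maximums.
SPECS: dict[str, dict[str, float]] = {
    "AWS": {"vcpus": 40, "ram_gb": 1024, "storage_tb": 16, "network_gbps": 100},
    "Azure": {"vcpus": 64, "ram_gb": 4096, "storage_tb": 8, "network_gbps": 200},
    "GCP": {"vcpus": 96, "ram_gb": 624, "storage_tb": 12, "network_gbps": 100},
}


def leaders(specs: dict[str, dict[str, float]]) -> dict[str, list[str]]:
    """Return the provider(s) with the highest value for each metric."""
    metrics = next(iter(specs.values())).keys()
    result: dict[str, list[str]] = {}
    for metric in metrics:
        best = max(row[metric] for row in specs.values())
        # Keep every provider that ties for the top value.
        result[metric] = [name for name, row in specs.items() if row[metric] == best]
    return result


for metric, names in leaders(SPECS).items():
    print(f"{metric}: {', '.join(names)}")
# vcpus: GCP
# ram_gb: Azure
# storage_tb: AWS
# network_gbps: Azure
```

Encoding the table this way also makes it easy to add rows for other providers or instance families as you evaluate them.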

Hardware Specifications

Hardware specifications, such as processor type, core count, and clock speed, directly affect CPU performance. Cloud providers offer instances built on Intel and AMD x86 processors as well as Arm-based processors (such as AWS Graviton), and these architectures differ in per-core performance, price, and software compatibility.
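Because the same instance name can map to different processors over time, it helps to confirm what a VM actually exposes. The following is a minimal sketch using only the Python standard library; `describe_cpu` and `cpu_model` are illustrative names, and reading `/proc/cpuinfo` assumes a Linux guest (on other systems, or on Arm kernels that omit the field, the model is reported as `None`).

```python
from __future__ import annotations

import os
import platform
from typing import Optional


def cpu_model(path: str = "/proc/cpuinfo") -> Optional[str]:
    """Return the 'model name' reported by Linux, or None if unavailable."""
    try:
        with open(path, encoding="utf-8") as f:
            for line in f:
                if line.lower().startswith("model name"):
                    return line.split(":", 1)[1].strip()
    except OSError:
        # Not Linux, or /proc is not mounted.
        return None
    # Some kernels (commonly on Arm) omit the 'model name' field.
    return None


def describe_cpu() -> dict[str, object]:
    """Summarize the processor this VM exposes to the guest OS."""
    return {
        "architecture": platform.machine(),  # e.g. "x86_64" or "aarch64"
        "logical_cpus": os.cpu_count(),  # vCPUs visible to the OS; may be None
        "model": cpu_model(),
    }


print(describe_cpu())
```

An `aarch64` architecture, for example, signals that your binaries and container images must be built for Arm.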

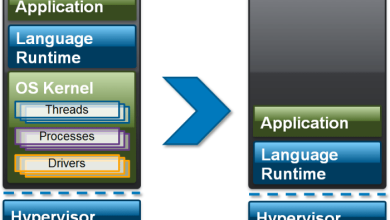

Virtualization Technologies

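Virtualization technologies, such as KVM, Xen, and Hyper-V, allow multiple virtual machines to run on a single physical server. Each hypervisor adds some overhead to CPU, memory, and I/O operations, and providers reduce that overhead to different degrees (for example, by offloading networking and storage to dedicated hardware), so the same nominal instance size can perform differently across providers.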
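One observable sign of virtualization overhead on a Linux guest is CPU steal time: ticks during which the vCPU was ready to run but the hypervisor was serving something else. The sketch below assumes the standard `/proc/stat` layout (user, nice, system, idle, iowait, irq, softirq, steal, then guest fields) and computes the steal percentage between two samples. The function names are hypothetical.

```python
from __future__ import annotations

import time

# Order of the numeric fields on the aggregate "cpu" line of /proc/stat.
FIELDS = ("user", "nice", "system", "idle", "iowait", "irq", "softirq", "steal")


def parse_cpu_line(line: str) -> list[int]:
    """Parse the aggregate 'cpu' line of /proc/stat into integer tick counts."""
    parts = line.split()
    if not parts or parts[0] != "cpu" or len(parts) < 1 + len(FIELDS):
        raise ValueError("expected the aggregate 'cpu' line with a steal field")
    return [int(x) for x in parts[1:]]


def steal_percent(before: list[int], after: list[int]) -> float:
    """Percentage of CPU time stolen by the hypervisor between two samples."""
    # guest/guest_nice are already counted in user/nice, so only the
    # first eight fields make up the total.
    n = len(FIELDS)
    deltas = [a - b for a, b in zip(after[:n], before[:n])]
    total = sum(deltas)
    return 100.0 * deltas[FIELDS.index("steal")] / total if total > 0 else 0.0


def sample_steal(interval: float = 1.0, path: str = "/proc/stat") -> float:
    """Measure steal time over `interval` seconds (Linux guests only)."""

    def read() -> list[int]:
        with open(path, encoding="utf-8") as f:
            return parse_cpu_line(f.readline())

    before = read()
    time.sleep(interval)
    return steal_percent(before, read())
```

Sustained non-zero steal under load suggests the host is contended, which is worth comparing across instance types and providers.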

Network Connectivity

Network connectivity is crucial for cloud servers to communicate with each other and the outside world. Cloud providers offer a range of network options, including dedicated connections, virtual private clouds (VPCs), and content delivery networks (CDNs). The speed and reliability of these networks can significantly impact cloud server performance.
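A simple way to compare network latency between regions or providers is to time TCP handshakes from a VM to the endpoints your application talks to. This is a rough sketch with hypothetical function names; when given a hostname, the timing also includes DNS resolution, and it measures connection setup only, not throughput.

```python
from __future__ import annotations

import socket
import statistics
import time


def connect_latency_ms(host: str, port: int, samples: int = 5, timeout: float = 2.0) -> list[float]:
    """Time repeated TCP handshakes to host:port, in milliseconds."""
    results: list[float] = []
    for _ in range(samples):
        start = time.perf_counter()
        # create_connection resolves the name (if any) and completes the handshake.
        with socket.create_connection((host, port), timeout=timeout):
            pass
        results.append((time.perf_counter() - start) * 1000.0)
    return results


def summarize_ms(values: list[float]) -> dict[str, float]:
    """Reduce raw samples to min/median/max, which are less noisy than a single run."""
    return {"min": min(values), "median": statistics.median(values), "max": max(values)}
```

For example, `summarize_ms(connect_latency_ms("example.com", 443))` run from a VM in each candidate region gives a quick, comparable latency snapshot.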

Benchmarking Cloud Server Performance

Evaluating the performance of cloud servers is crucial for businesses to make informed decisions about their infrastructure. Benchmarking tools and methodologies provide a standardized way to assess and compare the performance of different cloud providers.

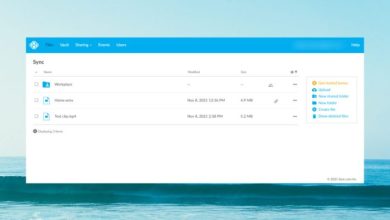

Monitoring cloud server performance across different providers can be a challenge. Server monitoring tools help you visualize and track key metrics, giving insight into resource utilization, performance bottlenecks, and potential areas for optimization. With these tools, you can maintain the performance and availability of your cloud-based applications and services.

Common benchmarking tools include:

- CloudHarmony: Provides a suite of tests for measuring performance metrics such as latency, throughput, and CPU performance across cloud providers.

- TPCx-BB: A TPC Express benchmark, based on BigBench, that measures the performance of Hadoop-based big data analytics systems running SQL and machine-learning queries.

- SPEC Cloud IaaS 2018: A standardized benchmark for measuring the performance and scalability of Infrastructure-as-a-Service (IaaS) offerings.
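Full benchmark suites take effort to run, but the core methodology they share can be applied to your own workload: run the same task on each candidate instance, discard warm-up runs, repeat many times, and report robust statistics instead of a single timing. The following is a minimal sketch of that approach; `benchmark` and `cpu_workload` are hypothetical names, and the workload is a placeholder to replace with something representative.

```python
from __future__ import annotations

import math
import statistics
import time
from typing import Callable


def benchmark(workload: Callable[[], object], runs: int = 20, warmup: int = 3) -> dict[str, float]:
    """Time `workload` repeatedly and report summary statistics in milliseconds."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    for _ in range(warmup):
        workload()  # warm CPU caches and lazily initialized state
    timings: list[float] = []
    for _ in range(runs):
        start = time.perf_counter()
        workload()
        timings.append((time.perf_counter() - start) * 1000.0)
    timings.sort()
    # Nearest-rank 95th percentile.
    p95 = timings[math.ceil(0.95 * len(timings)) - 1]
    return {"runs": runs, "median_ms": statistics.median(timings), "p95_ms": p95, "max_ms": timings[-1]}


def cpu_workload() -> int:
    """Hypothetical CPU-bound workload; replace with a representative task."""
    return sum(i * i for i in range(100_000))


print(benchmark(cpu_workload))
```

Reporting the median and 95th percentile side by side shows both typical speed and how much noisy neighbors or throttling affect the tail.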

Benchmark Results and Implications

Benchmark results vary with the cloud provider, server configuration, region, and workload. For example, a study by CloudHarmony found that:

- AWS EC2 instances generally outperformed Azure VMs in latency and throughput.

- Google Compute Engine (GCE) instances showed the lowest CPU utilization under the tested workloads, suggesting more spare capacity for the same work.

- DigitalOcean droplets performed consistently across different workloads, making them a good option for general-purpose applications.

These results have practical implications: businesses can use them to shortlist providers that match their performance requirements. For instance, if latency is critical, AWS EC2 is a natural candidate; if cost efficiency and consistent performance are priorities, DigitalOcean droplets may be worth considering. Because results like these depend on the instance types, regions, and test dates involved, confirm them by benchmarking your own workload before committing.
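The findings above mix two separate questions: which provider is fastest on average, and which performs most consistently. A minimal sketch for separating them, assuming you have collected several latency samples per provider, uses the mean for speed and the coefficient of variation (standard deviation divided by mean) for consistency. The function names are illustrative.

```python
from __future__ import annotations

import statistics


def summarize(samples: list[float]) -> dict[str, float]:
    """Mean, sample standard deviation, and coefficient of variation."""
    if len(samples) < 2:
        raise ValueError("need at least two samples to estimate variability")
    mean = statistics.fmean(samples)
    stdev = statistics.stdev(samples)
    return {"mean": mean, "stdev": stdev, "cv": stdev / mean if mean else float("inf")}


def compare(results: dict[str, list[float]]) -> dict[str, str]:
    """Pick the provider with the lowest mean and the one with the lowest CV."""
    stats = {name: summarize(samples) for name, samples in results.items()}
    return {
        "lowest_mean": min(stats, key=lambda name: stats[name]["mean"]),
        "most_consistent": min(stats, key=lambda name: stats[name]["cv"]),
    }
```

When the two answers differ, the right choice depends on whether your application is more sensitive to average latency or to unpredictable spikes.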

Optimizing Cloud Server Performance

Optimizing cloud server performance is crucial for maximizing the efficiency and cost-effectiveness of your cloud infrastructure. By implementing best practices for resource allocation, workload management, and network configuration, you can ensure that your cloud servers operate at peak performance while minimizing downtime and expenses.

Resource Allocation

Efficient resource allocation is essential for optimizing cloud server performance. This involves matching the size and type of your cloud server to the specific workload it will handle. Consider the following tips:

- Right-size your server: Choose a server with the appropriate CPU, memory, and storage capacity for your workload. Avoid overprovisioning, as this can lead to wasted resources and increased costs.

- Use autoscaling: Implement autoscaling to automatically adjust server resources based on demand. This ensures that your servers have the resources they need during peak usage times without overprovisioning during periods of low demand.
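Autoscalers typically track a target metric such as average CPU utilization. The sketch below follows the scaling rule documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current × currentUtilization / targetUtilization), including its default 10% tolerance. Managed autoscalers at AWS, Azure, and GCP implement their own policies, so treat this as an illustration of target tracking rather than any provider's algorithm; `desired_replicas` is a hypothetical name.

```python
from __future__ import annotations

import math


def desired_replicas(
    current: int,
    current_utilization: float,
    target_utilization: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Target-tracking scale decision: grow or shrink so utilization nears the target."""
    if current < 1 or target_utilization <= 0:
        raise ValueError("need at least one running replica and a positive target")
    ratio = current_utilization / target_utilization
    # Skip small corrections to avoid flapping around the target.
    if abs(ratio - 1.0) <= tolerance:
        desired = current
    else:
        desired = math.ceil(current * ratio)
    # Never scale outside the configured bounds.
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 servers at 90% CPU with a 60% target yields 6 servers, while 4 servers at 15% shrinks to 1.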

Workload Management

Effective workload management helps distribute tasks efficiently across your cloud servers, preventing bottlenecks and optimizing performance. Consider the following strategies:

- Use load balancing: Implement load balancing to distribute incoming requests across multiple servers, ensuring that no single server becomes overloaded.

- Optimize database performance: Use appropriate database indexing and caching techniques to improve database performance and reduce server load.
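Load balancers choose a backend for each request using a strategy such as round robin or least connections. The following is a minimal in-process sketch of least connections, which sends each request to the server with the fewest requests in flight. The class and server names are hypothetical, and managed cloud load balancers implement their own algorithms.

```python
from __future__ import annotations


class LeastConnectionsBalancer:
    """Route each request to the backend with the fewest active requests."""

    def __init__(self, servers: list[str]) -> None:
        if not servers:
            raise ValueError("at least one server is required")
        self._active = {server: 0 for server in servers}

    def acquire(self) -> str:
        # min() returns the first minimum, so ties go to the server listed first.
        server = min(self._active, key=self._active.__getitem__)
        self._active[server] += 1
        return server

    def release(self, server: str) -> None:
        """Mark one request on `server` as finished."""
        if self._active.get(server, 0) <= 0:
            raise ValueError(f"no active requests on {server!r}")
        self._active[server] -= 1
```

Compared with round robin, least connections adapts better when some requests take much longer than others, because slow servers accumulate in-flight requests and stop receiving new ones.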

Network Configuration

Proper network configuration is crucial for ensuring optimal cloud server performance. Consider the following tips:

- Use a high-performance network: Choose a cloud provider that offers a high-performance network with low latency and high bandwidth.

- Configure network security: Implement appropriate network security measures to protect your servers from unauthorized access and malicious attacks.
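A common misconfiguration is leaving administrative or database ports open to the entire internet. The sketch below audits a list of ingress rules for that case using Python's `ipaddress` module. The `IngressRule` format and the list of sensitive ports are assumptions for illustration; real security group and firewall rules use provider-specific schemas.

```python
from __future__ import annotations

import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class IngressRule:
    """Hypothetical, provider-neutral inbound rule: a port and a source CIDR."""

    port: int
    cidr: str


# Assumed list of ports that should rarely be reachable from anywhere.
SENSITIVE_PORTS = {22: "SSH", 3389: "RDP", 3306: "MySQL", 5432: "PostgreSQL"}


def risky_rules(rules: list[IngressRule]) -> list[str]:
    """Flag sensitive ports open to every address (0.0.0.0/0 or ::/0)."""
    findings: list[str] = []
    for rule in rules:
        network = ipaddress.ip_network(rule.cidr, strict=False)
        if network.prefixlen == 0 and rule.port in SENSITIVE_PORTS:
            findings.append(f"{SENSITIVE_PORTS[rule.port]} (port {rule.port}) open to {network}")
    return findings
```

Restricting such ports to known address ranges, or reaching them through a bastion host or VPN, narrows the attack surface without affecting public traffic.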

Case Studies: Real-World Cloud Server Performance

Real-world case studies provide valuable insights into the performance of cloud servers in practical scenarios. They showcase how different cloud providers and configurations impact application performance, cost, and reliability.

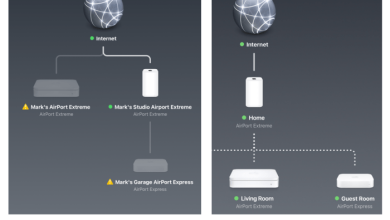

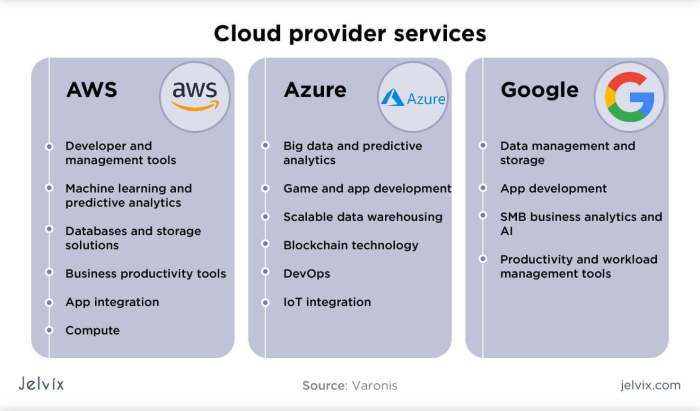

The performance of cloud servers can vary significantly across providers. A vendor comparison that covers the strengths and weaknesses of each provider can help you choose the best option for your requirements and optimize the performance of your cloud servers.

Factors Influencing Performance

Several factors influence the performance of cloud servers in real-world settings, including:

- Application Requirements: The type of application and its resource requirements can significantly impact performance.

- Workload Patterns: The workload patterns, such as peak usage times and data transfer volumes, affect server performance.

- Cost Considerations: Budget limits which instance sizes, storage tiers, and regions you can use, and prices vary by provider, region, and resource allocation.
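Workload patterns can be quantified with a peak-to-average ratio: the busiest hour's traffic divided by the mean hourly traffic. A high ratio means capacity sized for the average will fall short at peak. The sketch below computes the ratio and a server count for the peak; the function names, the requests-per-second capacity figure, and the 20% default headroom are assumptions for illustration.

```python
from __future__ import annotations

import math


def peak_to_average(hourly_requests: list[float]) -> float:
    """Ratio of the busiest hour to the mean hour; 1.0 means perfectly flat traffic."""
    if not hourly_requests or sum(hourly_requests) <= 0:
        raise ValueError("need at least one hour with traffic")
    average = sum(hourly_requests) / len(hourly_requests)
    return max(hourly_requests) / average


def servers_needed(peak_rps: float, capacity_per_server_rps: float, headroom: float = 0.2) -> int:
    """Servers required to absorb the peak with a safety margin (assumed 20% by default)."""
    if capacity_per_server_rps <= 0:
        raise ValueError("capacity_per_server_rps must be positive")
    return math.ceil(peak_rps * (1.0 + headroom) / capacity_per_server_rps)
```

For example, hourly counts of 100, 100, 400, and 200 requests give a ratio of 2.0, meaning the peak is twice the average. A workload like that is a good candidate for autoscaling rather than a fixed fleet sized for the peak.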

Emerging Trends in Cloud Server Performance

Cloud server performance is constantly evolving, driven by the adoption of new technologies and the increasing popularity of cloud-native architectures. These trends are shaping the future of cloud server performance, and businesses need to be aware of them in order to make the most of their cloud investments.

One of the most significant emerging trends is the adoption of NVMe storage. NVMe (Non-Volatile Memory Express) is a storage interface protocol designed for flash storage connected over PCIe. NVMe SSDs offer much lower latency and higher throughput than traditional hard disk drives (HDDs) and than SSDs that use the older SATA or SAS interfaces, which can significantly improve the performance of cloud servers, especially for applications that require fast access to data.

Another emerging trend is the rise of serverless computing, a cloud computing model in which the cloud provider manages the servers and infrastructure and the customer pays only for the resources consumed. Serverless computing can significantly simplify developing and deploying cloud applications, and it removes the need for businesses to manage their own servers. Its performance profile differs from that of always-on servers, however: a function that has been idle may incur cold-start latency on its next invocation.
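Many serverless platforms, AWS Lambda among them, bill by compute time scaled by allocated memory (GB-seconds) plus a per-request charge. The following sketch estimates a bill under that model. The function name is hypothetical, and the prices are placeholders you must replace with your provider's current rates.

```python
from __future__ import annotations


def pay_per_use_cost(
    invocations: int,
    avg_duration_ms: float,
    memory_mb: float,
    price_per_gb_second: float,
    price_per_million_requests: float,
) -> float:
    """Estimate cost under a GB-second plus per-request pricing model."""
    # Compute charge: total execution time in seconds times memory in GB.
    gb_seconds = invocations * (avg_duration_ms / 1000.0) * (memory_mb / 1024.0)
    compute = gb_seconds * price_per_gb_second
    # Request charge: billed per million invocations.
    requests = invocations / 1_000_000 * price_per_million_requests
    return compute + requests
```

Because duration drives the compute charge, performance and cost are directly linked here: halving a function's average run time roughly halves its compute charge.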

Finally, the increasing popularity of cloud-native architectures is also having a major impact on cloud server performance. Cloud-native architectures are designed to be deployed in the cloud, and they take advantage of the unique capabilities of cloud computing platforms. Cloud-native architectures can be more scalable, resilient, and performant than traditional architectures, and they can help businesses to get the most out of their cloud investments.

Cloud-native Architectures


- Scalability: Cloud-native architectures can be easily scaled up or down to meet changing demand. This makes them ideal for businesses that experience fluctuations in traffic or that need to quickly provision new resources.

- Resilience: Cloud-native architectures are designed to be resilient to failure. This means that they can continue to operate even if one or more of their components fails.

- Performance: Cloud-native architectures can be optimized for performance. This makes them ideal for businesses that need to run high-performance applications.
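Resilience in cloud-native systems often comes down to handling transient failures gracefully. A common pattern is to retry with capped exponential backoff and random jitter, so that many clients recovering at once do not retry in lockstep. The following is a minimal sketch; the function name, default delays, and the choice of retryable exceptions are assumptions to adapt to your services.

```python
from __future__ import annotations

import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    operation: Callable[[], T],
    attempts: int = 5,
    base_delay: float = 0.1,
    max_delay: float = 2.0,
    retry_on: tuple[type[BaseException], ...] = (ConnectionError, TimeoutError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `operation`, retrying transient failures with capped exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return operation()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            # "Full jitter": wait a random time up to the capped exponential delay.
            cap = min(max_delay, base_delay * 2**attempt)
            sleep(random.uniform(0.0, cap))
    raise AssertionError("unreachable")
```

Errors not listed in `retry_on` propagate immediately, which keeps genuine bugs from being masked by retries.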

Conclusion

In the ever-evolving landscape of cloud computing, understanding server performance is paramount. This discussion has shed light on the key factors influencing performance, from infrastructure specifications to workload patterns. By leveraging the knowledge gained, you can optimize your cloud deployments, harnessing the full potential of this transformative technology.