Cloud Server Performance Cost: Optimizing Performance Without Breaking the Bank

Cloud server performance cost is the relationship between what you pay for cloud resources and the speed, reliability, and capacity those resources deliver. This article covers the cost structure and pricing models, the metrics used to measure performance, practical optimization strategies, real-world examples, and the industry trends that are shifting the balance between cost and performance.

Cost Structure

Understanding the cost components associated with cloud server performance is essential for optimizing expenses and maximizing value.

Cloud server performance costs typically include:

- Compute: Charges for the virtual CPUs (vCPUs) and memory allocated to the server.

- Storage: Costs for the storage space allocated to the server, including attached disks and cloud object storage.

- Network: Fees for data transfer, mainly outbound (egress) traffic; inbound transfer is often free.

- Load balancing: Charges for services that distribute traffic across multiple servers to improve performance and availability.
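To see how these components add up, here is a minimal monthly bill estimator. All rates are hypothetical placeholders, not any provider's real prices. The 730-hour month is simply 8,760 hours per year divided by 12.

```python
from dataclasses import dataclass

@dataclass
class Rates:
    # Hypothetical unit prices in dollars; substitute your provider's published rates.
    vcpu_hour: float         # per vCPU-hour
    gb_ram_hour: float       # per GB of memory per hour
    gb_storage_month: float  # per GB of provisioned storage per month
    gb_egress: float         # per GB of outbound data transfer
    lb_hour: float           # per load balancer per hour

def monthly_cost(rates, vcpus, ram_gb, storage_gb, egress_gb, load_balancers, hours=730):
    """Return a per-component cost breakdown for one month, plus the total."""
    breakdown = {
        "compute": hours * (vcpus * rates.vcpu_hour + ram_gb * rates.gb_ram_hour),
        "storage": storage_gb * rates.gb_storage_month,
        "network": egress_gb * rates.gb_egress,
        "load_balancing": hours * load_balancers * rates.lb_hour,
    }
    # Sum the four components before the "total" key is added.
    breakdown["total"] = sum(breakdown.values())
    return breakdown

example_rates = Rates(vcpu_hour=0.03, gb_ram_hour=0.004, gb_storage_month=0.10,
                      gb_egress=0.09, lb_hour=0.025)
bill = monthly_cost(example_rates, vcpus=4, ram_gb=16, storage_gb=200,
                    egress_gb=500, load_balancers=1)
for component, dollars in bill.items():
    print(f"{component:>15}: ${dollars:,.2f}")
```

Breaking the bill into components shows which lever matters most. In this made-up example, compute dominates, so right-sizing the server would save more than trimming storage.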

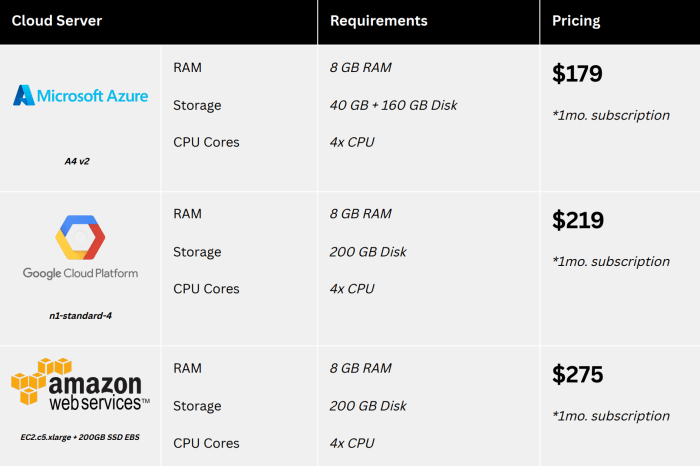

Pricing Models

Cloud providers offer various pricing models that impact performance costs:

- On-demand pricing: Pay-as-you-go billing for the resources you actually use, with no commitment.

- Reserved instances: Discounted rates in exchange for committing to specific resources for a fixed term, typically one or three years.

- Spot instances: Spare capacity sold at steep discounts that the provider can interrupt or reclaim at short notice.
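The choice between these models depends mostly on how many hours a server actually runs. The sketch below compares them under simple assumptions. The base rate and discounts are hypothetical. The reservation is modeled as billed for every hour of its term. Spot interruptions are modeled as a fixed percentage of extra hours spent redoing lost work.

```python
def on_demand_cost(hourly_rate, hours_used):
    # Pay only for the hours the server actually runs.
    return hourly_rate * hours_used

def reserved_cost(hourly_rate, discount, term_hours):
    # In this model the commitment is billed for the whole term, used or not.
    return hourly_rate * (1 - discount) * term_hours

def spot_cost(hourly_rate, discount, hours_needed, interruption_overhead):
    # Interruptions force some work to be redone; modeled as a fraction of extra hours.
    return hourly_rate * (1 - discount) * hours_needed * (1 + interruption_overhead)

def break_even_hours(discount, term_hours):
    # Reserved beats on-demand once usage exceeds this many hours in the term.
    return (1 - discount) * term_hours

RATE = 0.10  # hypothetical on-demand $/hour
MONTH = 730
print("always-on, on-demand:", round(on_demand_cost(RATE, MONTH), 2))
print("always-on, reserved: ", round(reserved_cost(RATE, 0.40, MONTH), 2))
print("always-on, spot:     ", round(spot_cost(RATE, 0.70, MONTH, 0.15), 2))
print("part-time (200 h), on-demand:", round(on_demand_cost(RATE, 200), 2))
print("reserved pays off above", round(break_even_hours(0.40, MONTH)), "hours/month")
```

With a 40% reservation discount, the reservation only pays off when the server runs more than 60% of the term. Spot pricing stays cheapest only while interruptions are rare and the workload can tolerate them.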

Cost-Performance Trade-offs

Optimizing cloud server performance often involves trade-offs with cost:

- Provisioning more resources (e.g., CPUs, memory) improves performance but increases costs.

- Using cheaper resources (e.g., spot instances) may result in performance fluctuations or interruptions.

- Implementing performance optimization techniques (e.g., caching, load balancing) can enhance performance without significant cost increases.

Performance Metrics

Performance metrics are crucial indicators used to assess the efficiency and responsiveness of cloud servers. These metrics directly influence user satisfaction and can significantly impact business outcomes.

Key performance metrics include:

- Latency: The delay between sending a request and the first part of the response arriving back, largely determined by network transit time and measured in milliseconds (ms).

- Throughput: The amount of work completed in a given time frame, measured in megabits per second (Mbps) for data transfer or in requests per second for application servers.

- Uptime: The percentage of time a server is available and operational, often stated as a number of “nines” (e.g., 99.99%, or “four nines”).

- Response Time: The total time taken for a server to process a request and return a response, including network latency and server processing time.

- Error Rates: The share of requests or operations that fail, such as HTTP 5xx server errors or database connection failures.
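Uptime targets become concrete when converted into a downtime budget. The sketch below converts an availability percentage into the maximum minutes of downtime it allows per year. It also computes an error rate from request counts. The function names are illustrative.

```python
def downtime_budget_minutes(availability_pct, period_hours=24 * 365):
    """Maximum downtime (in minutes) an availability target allows over a period."""
    if not 0 < availability_pct <= 100:
        raise ValueError("availability must be in (0, 100]")
    return (1 - availability_pct / 100) * period_hours * 60

def error_rate(total_requests, failed_requests):
    """Fraction of requests that failed; 0.0 when there was no traffic."""
    return failed_requests / total_requests if total_requests else 0.0

for target in (99.0, 99.9, 99.99, 99.999):
    print(f"{target}% -> {downtime_budget_minutes(target):.1f} minutes/year")
print(f"error rate: {error_rate(20_000, 37):.3%}")
```

Each extra nine cuts the budget tenfold. For example, 99.99% leaves roughly 52.6 minutes of downtime per year, which is why every additional nine usually costs more in redundancy.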

Monitoring and Measurement

Monitoring and measuring performance metrics is essential for optimizing server performance. Commonly used tools include:

- Cloud Monitoring: Google Cloud Platform’s monitoring service, which provides real-time insight into server metrics such as latency, throughput, and error rates.

- Nagios: An open-source monitoring system that tracks server performance and alerts administrators to issues.

- Ping: A simple command-line utility that sends ICMP echo requests to a server and reports the round-trip time of each reply, giving a quick latency measurement.
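Ping relies on ICMP, which typically requires raw sockets or elevated privileges to send from a program, and some networks block it. A rough alternative is to time TCP handshakes to the service port. The sketch below is a minimal version of that approach; the function names are hypothetical. Each sample covers the handshake, which is about one network round trip, plus any DNS lookup the call performs, so the first sample may be slower.

```python
import socket
import statistics
import time

def tcp_connect_latency_ms(host, port, attempts=5, timeout=2.0):
    """Time TCP handshakes to host:port and return the samples in milliseconds."""
    samples = []
    for _ in range(attempts):
        start = time.perf_counter()
        # create_connection resolves the host and completes the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            pass
        samples.append((time.perf_counter() - start) * 1000)
    return samples

def summarize(samples):
    """Median and worst-case latency; the median resists one-off spikes."""
    return {"median_ms": statistics.median(samples), "max_ms": max(samples)}
```

For example, `summarize(tcp_connect_latency_ms("example.com", 443))` reports the median and maximum handshake time to that host. Replace the host and port with your own server. Unlike a full response-time check, this measures only the network path, not server processing.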

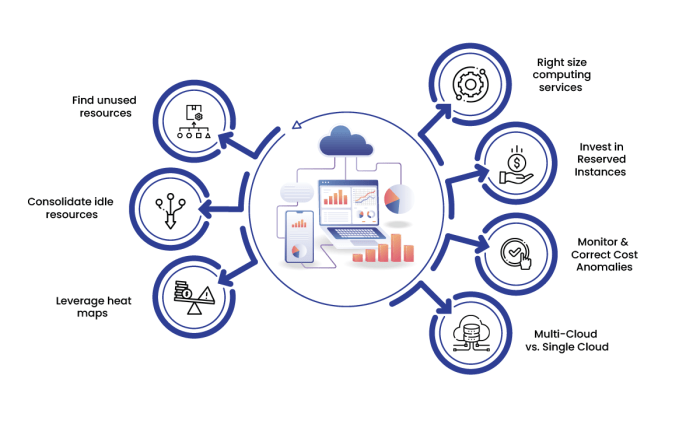

Optimization Strategies

Optimizing cloud server performance means applying best practices that improve efficiency while controlling costs, so that an organization gets more from its IT resources for the same or lower spend. This section covers three areas (configuration settings, software optimizations, and architectural considerations) and how each affects performance and cost.

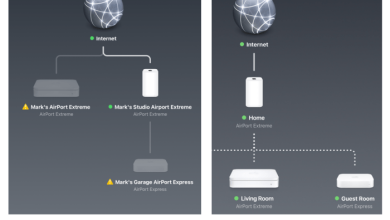

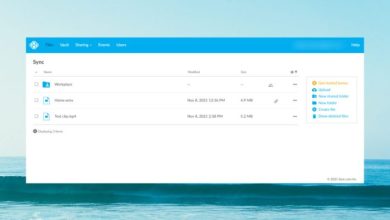

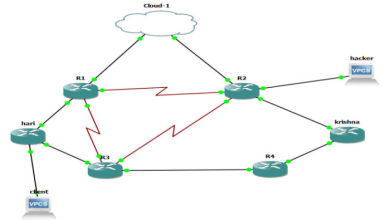

Server deployment also affects cloud server performance cost. A well-planned deployment designs the server architecture and its geographic distribution up front. This can minimize latency, improve scalability, and reduce costs, so that the cloud server delivers the performance you need at a cost-effective price.

Configuration Settings

Optimizing configuration settings can significantly impact performance. Consider the following:

- CPU and Memory Allocation: Size CPU and memory to workload requirements. Over-provisioning raises costs, while under-provisioning degrades performance.

- Network Settings: Choose instance types and network tiers with enough bandwidth for the workload, and monitor latency and packet loss to confirm the network is not a bottleneck.

- Storage Configuration: Choose the appropriate storage type (HDD, SATA SSD, or NVMe SSD) and RAID configuration to balance storage performance, reliability, and cost.
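Right-sizing CPU allocation can be framed as a small calculation. Take a high percentile of observed utilization, convert it into "vCPUs' worth of work," and size the server so that load lands near a target utilization. The sketch below assumes utilization samples are fractions of the current vCPU count. The 95th percentile and 70% target are illustrative choices, not standard values.

```python
import math

def recommend_vcpus(cpu_util_samples, current_vcpus, target_util=0.7, percentile=95):
    """Suggest a vCPU count so the chosen percentile of load sits near target_util.

    cpu_util_samples are utilization fractions (0.0-1.0) of current_vcpus.
    """
    if not cpu_util_samples:
        raise ValueError("need at least one utilization sample")
    ordered = sorted(cpu_util_samples)
    # Nearest-rank percentile: smallest sample covering `percentile`% of observations.
    rank = max(1, math.ceil(percentile * len(ordered) / 100))
    peak_util = ordered[rank - 1]
    busy_vcpus = peak_util * current_vcpus  # vCPUs' worth of work at that percentile
    return max(1, math.ceil(busy_vcpus / target_util))

# Hypothetical 8-vCPU server that mostly idles at 20% with occasional 30% peaks.
samples = [0.2] * 90 + [0.3] * 10
print(recommend_vcpus(samples, current_vcpus=8))
```

Using a high percentile rather than the average keeps headroom for normal peaks. The target below 100% leaves room for spikes the samples did not capture.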

Software Optimizations

Software optimizations can enhance performance by improving application efficiency:

- Caching and Load Balancing: Implement caching mechanisms and load balancing techniques to reduce server load and improve response times.

- Database Optimization: Optimize database queries, indexes, and schema design to enhance database performance.

- Application Code Optimization: Review and optimize application code to eliminate bottlenecks and improve efficiency.

Architectural Considerations

Architectural considerations involve optimizing the overall design of the cloud infrastructure:

- Vertical Scaling vs. Horizontal Scaling: Determine the appropriate scaling strategy based on workload requirements. Vertical scaling involves upgrading a single server, while horizontal scaling involves adding more servers.

- Cloud Provider Selection: Choose a cloud provider that offers the right infrastructure, features, and pricing model for your specific needs.

- Hybrid Cloud Architecture: Consider a hybrid cloud architecture that combines on-premises infrastructure with cloud resources for optimal performance and cost-effectiveness.
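Horizontal scaling is usually automated with a proportional rule. If each server runs hotter than the target, add servers in proportion; if it runs cooler, remove them. The function below uses the same formula that the Kubernetes Horizontal Pod Autoscaler documents (`ceil(current * currentMetric / targetMetric)`), without that controller's tolerance band. The function name and the min/max limits are illustrative.

```python
import math

def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=100):
    """Proportional scaling: size the fleet so the per-replica metric nears the target."""
    desired = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to configured limits so a spike cannot scale costs without bound.
    return max(min_replicas, min(max_replicas, desired))

print(desired_replicas(4, current_metric=90, target_metric=60))  # scale out
print(desired_replicas(4, current_metric=30, target_metric=60))  # scale in
```

The `max_replicas` cap is where cost control enters. It bounds spending during traffic spikes at the price of slower responses once the cap is reached.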

Case Studies and Examples

Organizations worldwide have successfully implemented strategies to enhance cloud server performance, resulting in significant improvements in efficiency and cost savings.

These case studies demonstrate the practical application of performance optimization techniques and provide valuable insights into the challenges, solutions, and outcomes.

The cost of a cloud server also depends on the type of server, the amount of RAM and storage, and the region where it runs. Security belongs in the same calculation, because a breach can lead to data loss or theft. In the end, the right choice weighs each server's cost against the performance and security it provides for your specific requirements.

Netflix

Netflix, the streaming giant, migrated its infrastructure to the cloud to handle its massive data requirements and global reach.

- Challenge: Scalability and performance during peak viewing hours.

- Solution: Implemented auto-scaling and load balancing to handle fluctuating demand.

- Results: Improved streaming quality, reduced buffering, and increased customer satisfaction.

Spotify

Spotify, the music streaming service, optimized its cloud infrastructure to improve user experience and reduce costs.

- Challenge: High latency and slow response times during peak usage.

- Solution: Implemented content delivery networks (CDNs) and caching mechanisms.

- Results: Reduced latency by 50%, improved user experience, and lowered infrastructure costs.

Industry Trends and Innovations

The cloud computing landscape is constantly evolving, with new technologies and best practices emerging to optimize cloud server performance. These advancements are shaping the industry and driving cost reductions for businesses.

Cloud Native Architectures

Cloud-native architectures are designed specifically for the cloud environment, leveraging its scalability, elasticity, and agility. They enable organizations to build and deploy applications that are more efficient, reliable, and cost-effective.

Serverless Computing

Serverless computing lets developers run code without managing servers or infrastructure. The provider handles provisioning, scaling, and maintenance, and bills per invocation and execution time. This can significantly reduce operational costs and simplify application development, particularly for intermittent or unpredictable workloads.
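Whether serverless is cheaper depends on traffic volume. Per-invocation billing wins at low volume, while an always-on server wins once it is kept busy. The sketch below compares the two using a common serverless billing shape: a per-request fee plus a charge per GB-second of execution. All prices are hypothetical placeholders. It also assumes one always-on server could handle the whole load.

```python
def serverless_monthly_cost(requests, avg_duration_s, memory_gb,
                            price_per_million_requests, price_per_gb_second):
    # Pay per invocation plus compute time (GB-seconds); nothing when idle.
    gb_seconds = requests * avg_duration_s * memory_gb
    return requests / 1_000_000 * price_per_million_requests + gb_seconds * price_per_gb_second

def always_on_monthly_cost(hourly_rate, instances=1, hours=730):
    # A provisioned server is billed for every hour, busy or idle.
    return hourly_rate * instances * hours

def break_even_requests(always_on_cost, avg_duration_s, memory_gb,
                        price_per_million_requests, price_per_gb_second):
    # Monthly request volume at which both options cost the same.
    per_request = (price_per_million_requests / 1_000_000
                   + avg_duration_s * memory_gb * price_per_gb_second)
    return always_on_cost / per_request

PRICES = dict(price_per_million_requests=0.20, price_per_gb_second=0.0000167)  # hypothetical
vm = always_on_monthly_cost(hourly_rate=0.05)  # hypothetical small server
for monthly_requests in (1_000_000, 50_000_000):
    fn = serverless_monthly_cost(monthly_requests, 0.2, 0.5, **PRICES)
    print(f"{monthly_requests:>11,} requests: serverless ${fn:,.2f} vs server ${vm:,.2f}")
print(f"break-even ~{break_even_requests(vm, 0.2, 0.5, **PRICES):,.0f} requests/month")
```

Under these assumptions, serverless is far cheaper at one million requests a month, and the always-on server becomes cheaper somewhere below fifty million.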

Artificial Intelligence (AI) and Machine Learning (ML)

AI and ML are being applied to optimize cloud server performance. They can analyze usage patterns, identify inefficiencies, and automate tasks, resulting in improved resource utilization and cost savings.

Edge Computing

Edge computing brings computing resources closer to end users, reducing latency and improving application performance. It can also cut costs by reducing long-distance data transmission and processing data locally.
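A back-of-the-envelope calculation shows why distance dominates latency. Light in optical fiber travels at roughly two-thirds of its vacuum speed, about 200 km per millisecond. That sets a hard lower bound on round-trip time no matter how fast the server is. The distances below are illustrative. Real network paths are longer than straight lines and add routing and queuing delays on top.

```python
FIBER_KM_PER_MS = 200.0  # approximate speed of light in optical fiber

def min_round_trip_ms(distance_km):
    """Lower bound on round-trip time from propagation delay alone.

    Ignores routing detours, queuing, and server processing time.
    """
    return 2 * distance_km / FIBER_KM_PER_MS

print(min_round_trip_ms(6000))  # distant central region
print(min_round_trip_ms(50))    # nearby edge location
```

A user 6,000 km from the server faces at least 60 ms per round trip, while an edge node 50 km away brings that floor down to 0.5 ms. No amount of extra server capacity can remove that gap.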

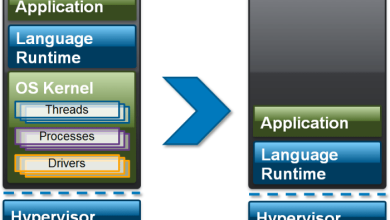

Containerization

Containerization packages applications and their dependencies into lightweight, portable containers. This enables efficient resource utilization, faster deployment, and reduced costs associated with managing multiple virtual machines.
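Much of the saving from containers comes from packing several workloads onto shared machines instead of giving each its own VM. The sketch below uses first-fit decreasing, a classic bin-packing heuristic, to place containers by CPU request (in millicores) onto nodes of fixed capacity. It is an illustration of the idea only. Real schedulers such as Kubernetes weigh many more factors, and the request sizes here are hypothetical.

```python
def pack_containers(requests_mcpu, node_capacity_mcpu):
    """First-fit decreasing: place containers onto as few nodes as possible."""
    nodes = []  # each node is a list of the container requests placed on it
    for req in sorted(requests_mcpu, reverse=True):
        if req > node_capacity_mcpu:
            raise ValueError(f"container request {req} exceeds node capacity")
        for node in nodes:
            if sum(node) + req <= node_capacity_mcpu:
                node.append(req)  # first existing node with room
                break
        else:
            nodes.append([req])  # no node had room: start a new one
    return nodes

apps = [500, 1500, 250, 1000, 750, 2000, 250]  # seven hypothetical services
placement = pack_containers(apps, node_capacity_mcpu=4000)
print(f"{len(apps)} services on {len(placement)} nodes: {placement}")
```

Here seven services that would each need a dedicated VM fit on two 4-vCPU nodes. That reduction in machine count is the resource-utilization gain described above.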

Outcome Summary: Cloud Server Performance Cost

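Cloud server performance cost comes down to paying for the compute, storage, network, and load-balancing capacity a workload actually needs. Choosing the right pricing model helps keep that spending in check. Tracking latency, throughput, uptime, response time, and error rates shows whether the performance is there. Configuration, software, and architectural optimizations then raise performance without a matching rise in spending. Trends such as serverless computing, edge computing, and containerization keep shifting this balance, so the best-value setup is one you revisit as workloads and offerings change.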