Cloud Server Performance Scalability: A Comprehensive Guide to Enhancing Performance and Scaling with Ease

This guide covers how to optimize cloud server performance, choose between scaling strategies, benchmark the results, and keep a scaled environment secure.

Cloud server performance scalability refers to the ability of a cloud server to handle increasing workloads and traffic while maintaining optimal performance. It is a crucial aspect of cloud computing, as it enables businesses to seamlessly scale their resources up or down based on demand, without compromising on speed or reliability.

Performance Optimization Techniques

Optimizing cloud server performance is crucial for ensuring seamless user experiences, reducing costs, and maintaining business continuity. Here are some effective techniques to enhance performance:

Load Balancing

Distribute incoming traffic across multiple servers to prevent overloading and improve responsiveness. Consider using a load balancer to manage traffic flow and optimize resource allocation.
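As a rough illustration of distributing traffic across servers, here is a minimal round-robin sketch in Python. The class name, the backend names (`app-1` and so on), and the health-marking methods are hypothetical and exist only for this example. A production load balancer would also handle health checks, connection draining, and weighting.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out backends in a fixed rotation, skipping ones marked unhealthy."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._rotation = cycle(self.backends)

    def mark_down(self, backend):
        # Stop routing to a backend, for example after a failed health check.
        self.healthy.discard(backend)

    def mark_up(self, backend):
        if backend in self.backends:
            self.healthy.add(backend)

    def next_backend(self):
        if not self.healthy:
            raise RuntimeError("no healthy backends available")
        # One full pass over the rotation is enough to find a healthy backend.
        for _ in range(len(self.backends)):
            candidate = next(self._rotation)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backends available")


balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
first_round = [balancer.next_backend() for _ in range(4)]
balancer.mark_down("app-2")
after_failure = [balancer.next_backend() for _ in range(4)]
```

In this run, `first_round` cycles through all three backends, and once `app-2` is marked down the rotation continues with the remaining two.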

Caching

Store frequently accessed data in a cache to reduce the load on the server and improve response times. Implement caching mechanisms like Redis, Memcached, or Varnish to enhance performance.
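The caching idea can be sketched with a small in-memory cache that expires entries after a fixed time. This is an illustrative stand-in, not how Redis, Memcached, or Varnish work internally. The `TTLCache` class and the `load_profile` function are hypothetical names, and `load_profile` stands in for an expensive database or API call.

```python
import time


class TTLCache:
    """In-memory cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._entries = {}  # key -> (expires_at, value)

    def get_or_load(self, key, loader):
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: the expensive call is skipped
        value = loader(key)  # cache miss or expired entry
        self._entries[key] = (now + self.ttl_seconds, value)
        return value


calls = []


def load_profile(user_id):
    # Hypothetical slow lookup; records each call so hits can be counted.
    calls.append(user_id)
    return {"id": user_id, "name": f"user-{user_id}"}


cache = TTLCache(ttl_seconds=30)
cache.get_or_load(42, load_profile)
cache.get_or_load(42, load_profile)  # served from the cache
```

The second lookup never reaches `load_profile`, which is the load reduction the paragraph above describes. The time-to-live bounds how stale a cached value can become.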

Resource Provisioning

Provision adequate resources (CPU, memory, storage) to meet the demands of your application. Monitor resource utilization and adjust provisioning as needed to ensure optimal performance.

Monitoring and Bottleneck Identification

Regularly monitor server performance metrics (e.g., CPU utilization, memory usage, network traffic) to identify potential bottlenecks. Use tools like CloudWatch or Prometheus to collect and analyze metrics.
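One simple way to turn collected metrics into bottleneck candidates is to flag any metric that stays above a threshold for several consecutive samples. The sketch below assumes the samples have already been exported as plain dictionaries. The metric names, threshold values, and the `find_bottlenecks` helper are hypothetical and do not correspond to the CloudWatch or Prometheus APIs.

```python
def find_bottlenecks(samples, thresholds, min_consecutive=3):
    """Return metrics that exceeded their threshold for min_consecutive samples in a row."""
    flagged = []
    for metric, limit in thresholds.items():
        streak = 0
        for sample in samples:
            # A single spike resets nothing; a dip below the limit resets the streak.
            streak = streak + 1 if sample[metric] > limit else 0
            if streak >= min_consecutive:
                flagged.append(metric)
                break
    return flagged


# Hypothetical samples, one per collection interval.
samples = [
    {"cpu_percent": 62, "memory_percent": 71},
    {"cpu_percent": 91, "memory_percent": 72},
    {"cpu_percent": 94, "memory_percent": 74},
    {"cpu_percent": 97, "memory_percent": 73},
    {"cpu_percent": 58, "memory_percent": 75},
]
thresholds = {"cpu_percent": 85, "memory_percent": 90}

flagged = find_bottlenecks(samples, thresholds)
```

Requiring a sustained breach rather than a single high reading helps avoid reacting to short spikes.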

Database Optimization

Optimize database performance by using appropriate indexing, query optimization techniques, and database-specific configuration settings. Consider using managed database services like Amazon RDS or Azure Cosmos DB for improved performance and scalability.
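To illustrate the effect of indexing, the following sketch uses Python's built-in `sqlite3` module with an in-memory database. The `orders` table, its columns, and the index name are made up for the example. A managed service such as Amazon RDS would use a different engine, but the principle of checking the query plan before and after adding an index carries over.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, i * 1.5) for i in range(1000)],
)

query = "SELECT total FROM orders WHERE customer_id = ?"


def query_plan(sql, params):
    # The fourth column of each EXPLAIN QUERY PLAN row holds the plan description.
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " ".join(row[3] for row in rows)


plan_before = query_plan(query, (7,))  # full table scan
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(query, (7,))   # lookup through the new index

print(plan_before)
print(plan_after)
```

The exact wording of the plan varies between SQLite versions, but the first plan reports a scan of the table and the second reports a search using `idx_orders_customer`.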

Scalability Considerations

Scalability is a crucial aspect of cloud servers, directly impacting performance and ensuring seamless operations as user demand fluctuates. It involves the ability to adapt server resources dynamically to meet changing workloads and maintain optimal performance.

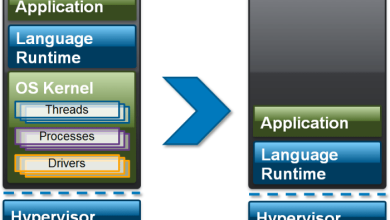

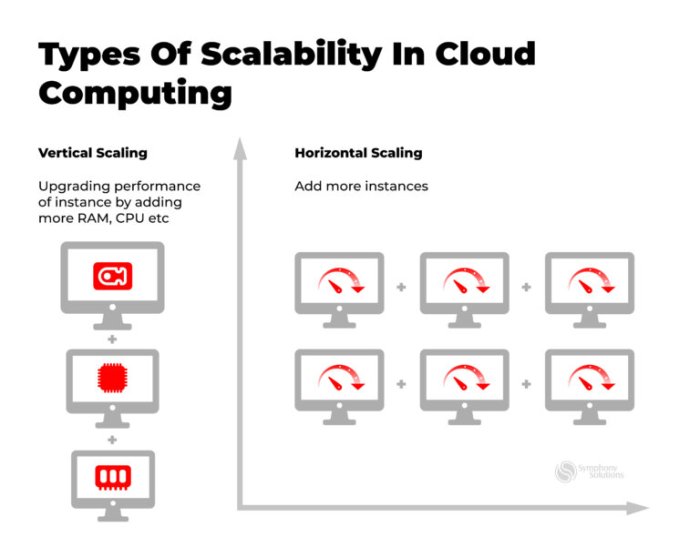

There are two main scaling strategies: horizontal scaling and vertical scaling. Horizontal scaling, also known as scale-out, involves adding more servers to distribute the load, while vertical scaling, or scale-up, involves upgrading the existing server’s hardware capabilities.

Horizontal Scaling

Horizontal scaling offers several advantages. It provides greater flexibility and cost-effectiveness as additional servers can be added incrementally based on demand. It also improves fault tolerance by distributing the workload across multiple servers, reducing the impact of any single server failure.
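Adding servers incrementally based on demand is often driven by a proportional rule: scale the server count by the ratio of observed to target utilization. The sketch below is one such rule under stated assumptions, not a description of any particular cloud provider's autoscaler. The function name, the percentage inputs, and the default limits are hypothetical.

```python
import math


def desired_server_count(current_servers, observed_percent, target_percent,
                         min_servers=1, max_servers=20):
    """Proportional scale-out rule with lower and upper bounds on fleet size."""
    if current_servers < 1 or target_percent <= 0:
        raise ValueError("need at least one server and a positive target")
    # Round up so the fleet is never sized below what the target requires.
    raw = math.ceil(current_servers * observed_percent / target_percent)
    return max(min_servers, min(max_servers, raw))


scale_out = desired_server_count(4, observed_percent=90, target_percent=60)
scale_in = desired_server_count(6, observed_percent=20, target_percent=60)
capped = desired_server_count(10, observed_percent=95, target_percent=30)
```

With these inputs, four servers at 90% utilization grow to six, six servers at 20% shrink to two, and the last request is capped at the 20-server maximum. The cap limits cost if a metric misbehaves.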

Vertical Scaling

Vertical scaling, on the other hand, is simpler to implement and can provide a quick performance boost by upgrading the server’s hardware components. However, it can be more expensive than horizontal scaling, it is capped by the largest machine size available, and resizing often requires a restart, so it is less effective at handling large workload fluctuations.

Trade-offs Between Scaling Approaches

The choice between horizontal and vertical scaling depends on the specific requirements of the application and the cloud environment. Horizontal scaling is generally more suitable for applications with unpredictable or rapidly changing workloads, while vertical scaling is better suited for applications with stable or predictable workloads.

Benchmarking and Performance Measurement

Benchmarking is crucial for evaluating the performance of cloud servers. It involves establishing a baseline and comparing performance against it over time. Performance measurement provides insights into how a server responds to various workloads and helps identify areas for improvement.
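Comparing against a baseline can be as simple as computing the relative change of each metric and flagging anything that worsens beyond a tolerance. The sketch below assumes lower values are better and baseline values are non-zero. The metric names, the numbers, and the 10% tolerance are hypothetical.

```python
def compare_to_baseline(baseline, current, tolerance=0.10):
    """Return metrics that got worse than the baseline by more than tolerance.

    Assumes lower is better for every metric and baseline values are non-zero.
    """
    regressions = {}
    for metric, base_value in baseline.items():
        change = (current[metric] - base_value) / base_value
        if change > tolerance:
            regressions[metric] = round(change, 3)
    return regressions


# Hypothetical measurements from two benchmark runs.
baseline = {"p95_latency_ms": 200.0, "error_rate_percent": 0.5}
current = {"p95_latency_ms": 250.0, "error_rate_percent": 0.5}

regressions = compare_to_baseline(baseline, current)
```

Here the 95th-percentile latency rose by 25%, so it is reported as a regression, while the unchanged error rate is not.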

Techniques for Benchmarking

- Synthetic Benchmarks: Simulate realistic workloads to measure performance under controlled conditions.

- Real-World Benchmarks: Run actual applications and workloads to assess performance in a production-like environment.

- Load Testing: Simulate heavy user traffic to determine how the server handles peak loads.
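The load-testing idea can be sketched with a thread pool that issues many concurrent calls and records how long each one takes. In this example `fake_handler` is a hypothetical stand-in that only sleeps. A real test would replace it with an HTTP request to the server under test, or use a dedicated load-testing tool.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def run_load_test(handler, total_requests, concurrency):
    """Call handler total_requests times from `concurrency` worker threads.

    Returns the per-call latencies in seconds and the total elapsed time.
    """
    def timed_call(request_id):
        start = time.perf_counter()
        handler(request_id)
        return time.perf_counter() - start

    started = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(timed_call, range(total_requests)))
    elapsed = time.perf_counter() - started
    return latencies, elapsed


def fake_handler(request_id):
    # Hypothetical stand-in for one request to the server under test.
    time.sleep(0.001)


latencies, elapsed = run_load_test(fake_handler, total_requests=50, concurrency=10)
print(f"{len(latencies)} requests in {elapsed:.3f}s")
```

Raising `concurrency` while watching latency is a simple way to find the point where the server starts to saturate.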

Selecting Appropriate Metrics

Metrics should be relevant to the specific application and workload. Common metrics include:* Response time: Time taken to process a request.

Throughput

Number of requests processed per unit time.

Resource utilization

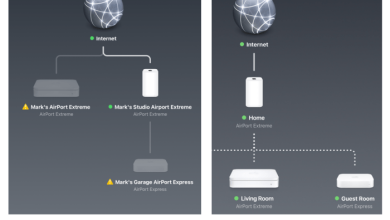

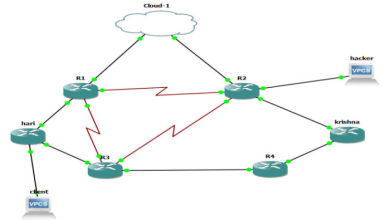

Ensuring cloud server performance scalability is crucial for handling increased traffic and maintaining optimal performance. To achieve this, it’s essential to adopt best practices for cloud diagram server design and implementation. Cloud diagram server best practices provide valuable guidance on optimizing server architecture, network configurations, and resource allocation, which ultimately contribute to enhanced scalability and improved performance of cloud servers.

CPU, memory, and network usage.
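Response time and throughput can be summarized from raw measurements with a few lines of Python. This is a minimal sketch: the latency values are invented, the `summarize` helper is hypothetical, and the percentile uses the nearest-rank method, which is only one of several common definitions.

```python
import math


def summarize(latencies_ms, window_seconds):
    """Summarize response time and throughput for one measurement window."""
    ordered = sorted(latencies_ms)
    # Nearest-rank 95th percentile: the value at position ceil(0.95 * n).
    rank = math.ceil(0.95 * len(ordered))
    return {
        "mean_ms": sum(ordered) / len(ordered),
        "p95_ms": ordered[rank - 1],
        "throughput_rps": len(ordered) / window_seconds,
    }


# Hypothetical latencies for ten requests observed over two seconds.
latencies_ms = [120, 95, 110, 480, 105, 98, 102, 115, 99, 101]
summary = summarize(latencies_ms, window_seconds=2)
```

In this sample a single slow request pulls the 95th percentile to 480 ms while the mean is 142.5 ms, which is why tail percentiles are often reported alongside averages.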

Tools and Frameworks

Having the right tools in place is essential when scaling a cloud server’s performance. Monitoring provides the insight needed to make informed decisions: by tracking key metrics such as CPU usage, memory usage, and network traffic, you can identify bottlenecks and address them before they affect users.

Numerous tools and frameworks assist with performance testing:

- Cloud Monitoring Tools: Amazon CloudWatch, Azure Monitor, and Google Cloud Monitoring provide built-in performance monitoring capabilities.

- Open-Source Frameworks: JMeter, ApacheBench, and Siege simulate user traffic and measure performance.

- Commercial Tools: LoadRunner, WebLOAD, and NeoLoad offer comprehensive performance testing solutions.

Security Implications

Scaling cloud servers introduces potential security risks that need to be carefully considered and addressed. As the number of servers increases, so does the attack surface, making it more challenging to maintain a robust security posture.

Some of the key security risks associated with scaling cloud servers include:

- Increased exposure to vulnerabilities: With more servers, there are more potential entry points for attackers to exploit. Each server may have different configurations, software versions, and security settings, making it difficult to ensure consistent protection across the entire environment.

- Complexity of management: Scaling cloud servers can lead to increased complexity in managing security controls. With multiple servers to manage, it becomes more challenging to keep track of security updates, patch vulnerabilities, and monitor for suspicious activity.

- Data breaches: Scaling cloud servers can increase the risk of data breaches if proper security measures are not in place. Attackers may target specific servers or use vulnerabilities to gain access to sensitive data stored in the cloud.

Recommendations for Securing Cloud Servers in Scalable Environments

To mitigate the security risks associated with scaling cloud servers, it is essential to implement robust security measures and best practices:

- Implement strong authentication and access controls: Use multi-factor authentication, role-based access controls, and strong passwords to prevent unauthorized access to cloud servers.

- Keep software up to date: Regularly patch and update software on all servers to address vulnerabilities and security risks.

- Use security monitoring tools: Implement security monitoring tools to detect and respond to suspicious activity in real-time. These tools can help identify potential threats and breaches early on.

- Implement encryption: Encrypt data at rest and in transit to protect it from unauthorized access and interception.

- Regularly review and audit security configurations: Regularly review and audit security configurations to ensure that they are up-to-date and effective.
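Part of auditing a scaled environment is checking that every server runs the same software versions. The sketch below flags servers whose package versions differ from the most common version in the fleet. The inventory format, server names, and version strings are hypothetical. A real audit would pull this data from a configuration management or inventory system.

```python
from collections import Counter


def find_version_drift(inventory):
    """Map each drifting server to {package: (found, expected)}.

    `expected` is the most common version of that package across the fleet.
    On a tie, the version seen first wins.
    """
    drift = {}
    packages = {pkg for versions in inventory.values() for pkg in versions}
    for pkg in sorted(packages):
        counts = Counter(v[pkg] for v in inventory.values() if pkg in v)
        expected = counts.most_common(1)[0][0]
        for server, versions in inventory.items():
            found = versions.get(pkg)  # None if the package is missing
            if found != expected:
                drift.setdefault(server, {})[pkg] = (found, expected)
    return drift


# Hypothetical fleet inventory.
inventory = {
    "web-1": {"openssl": "3.0.13", "nginx": "1.24.0"},
    "web-2": {"openssl": "3.0.13", "nginx": "1.24.0"},
    "web-3": {"openssl": "3.0.11", "nginx": "1.24.0"},
}

drift = find_version_drift(inventory)
```

Here only `web-3` is reported, because its `openssl` version lags behind the rest of the fleet. Treating the majority version as the expected one is a simplification. A stricter audit would compare against an approved version list.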

Case Studies and Real-World Examples

Cloud server performance scalability has proven its worth in numerous real-world scenarios. Let’s explore some case studies and the lessons learned from them.

Netflix

Netflix is a prime example of a company that has successfully leveraged cloud scalability to meet the demands of its massive user base. By migrating its infrastructure to the cloud, Netflix gained the ability to quickly scale up its resources during peak usage periods, ensuring a seamless streaming experience for its users.

Amazon Web Services (AWS)

AWS, one of the leading cloud providers, offers a range of scalability solutions for its customers. One notable example is the use of Amazon Elastic Compute Cloud (EC2) instances, which can be scaled up or down on demand, allowing businesses to optimize their cloud usage and reduce costs.

Uber

Uber, the ride-hailing giant, relies heavily on cloud scalability to handle its fluctuating demand. During peak hours, Uber automatically scales up its cloud infrastructure to meet the surge in ride requests, ensuring that users can get a ride quickly and efficiently.

Conclusion

In conclusion, cloud server performance scalability is a multifaceted topic that encompasses a wide range of strategies, considerations, and best practices. By applying the techniques outlined in this guide, businesses can optimize their cloud server performance, ensure scalability, and achieve a competitive edge in today’s dynamic digital landscape.