Cloud Server Performance Across Diverse Workloads

This article explores how cloud server performance changes across different workloads, focusing on the relationship between server configuration and workload demands. By examining key factors such as CPU, memory, and storage, we show how each workload type affects server performance, so readers can configure their cloud infrastructure for efficiency.

We then look at strategies for tailoring server configurations to specific workloads, and at a real-world case study showing how an organization used cloud servers to handle a demanding workload and which factors drove its success.

Performance Benchmarks for Cloud Servers

Cloud server performance is a crucial factor for businesses looking to host their applications and data in the cloud. Various cloud service providers offer different server configurations and pricing models, making it essential to understand how these factors impact performance.

Key Factors Influencing Server Performance

The performance of a cloud server is primarily determined by the following factors:

- CPU: The number of cores and the clock speed of the processor determine the server’s processing power.

- Memory (RAM): The amount of memory available affects the server’s ability to handle multiple tasks simultaneously.

- Storage: The type and size of storage (e.g., SSD vs. HDD) impact the server’s data access speed and capacity.
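To make the storage factor concrete, a common back-of-the-envelope relationship is throughput ≈ IOPS × I/O size. The minimal Python sketch below applies it. The function name and the optional `throughput_cap_mib_s` parameter are illustrative assumptions (many cloud block volumes limit throughput separately from IOPS), and the figures in the usage lines are placeholders, not measurements of any real disk.

```python
from typing import Optional


def effective_throughput_mib_s(
    iops: float, io_size_kib: float, throughput_cap_mib_s: Optional[float] = None
) -> float:
    """Estimate storage throughput (MiB/s) implied by an IOPS rate at a fixed I/O size.

    Illustrative sketch: throughput ~= IOPS x I/O size, optionally limited by a
    separate throughput cap (an assumption about how the volume is provisioned).
    """
    if iops < 0 or io_size_kib <= 0:
        raise ValueError("iops must be >= 0 and io_size_kib must be > 0")
    implied = iops * io_size_kib / 1024  # KiB/s -> MiB/s
    if throughput_cap_mib_s is not None:
        return min(implied, throughput_cap_mib_s)
    return implied


# Placeholder figures, not benchmarks:
# 3,000 IOPS of 16 KiB I/Os imply about 46.9 MiB/s.
print(effective_throughput_mib_s(3000, 16))
# At 256 KiB per I/O the same IOPS imply 750 MiB/s, but a 125 MiB/s cap wins.
print(effective_throughput_mib_s(3000, 256, throughput_cap_mib_s=125))
```

Small random I/O tends to exhaust an IOPS budget first, while large sequential I/O tends to hit a throughput limit first, which is one reason the storage type matters so much for data access speed.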

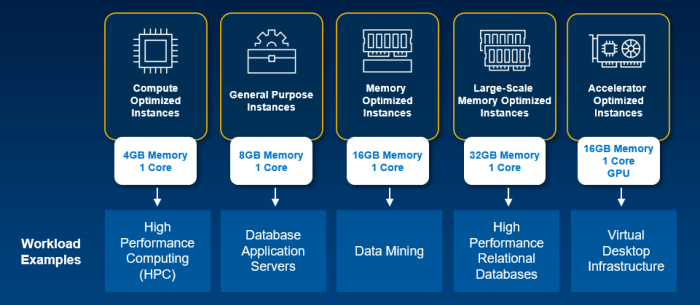

Workload Types and Server Performance

The type of workload running on a server also influences its performance. Some common workload types include:

- Web hosting: Serving static and dynamic web content requires servers with high bandwidth and fast storage.

- Database workloads: Managing large databases requires servers with ample memory and fast storage.

- Virtualization: Hosting multiple virtual machines on a single server demands high CPU and memory resources.
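The workload descriptions above can be encoded as a small lookup table, which also makes it easy to spot resources that co-located workloads would compete for. This Python sketch is illustrative only: the workload keys and resource labels are hypothetical names paraphrased from the list above, not any cloud provider’s taxonomy.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple

# Resource priorities paraphrased from the workload list above (hypothetical labels).
WORKLOAD_PROFILES: Dict[str, Tuple[str, ...]] = {
    "web_hosting": ("network_bandwidth", "storage_speed"),
    "database": ("memory", "storage_speed"),
    "virtualization": ("cpu", "memory"),
}


def priority_resources(workload: str) -> Tuple[str, ...]:
    """Return the resources a workload type leans on most."""
    try:
        return WORKLOAD_PROFILES[workload]
    except KeyError:
        raise ValueError(f"unknown workload type: {workload!r}") from None


def contended_resources(workloads: Iterable[str]) -> List[str]:
    """List resources that two or more of the given workloads would compete for."""
    counts = Counter(
        resource for w in workloads for resource in priority_resources(w)
    )
    return sorted(resource for resource, n in counts.items() if n > 1)


# Running a database and VMs on one host means both pull hard on memory.
print(contended_resources(["database", "virtualization"]))  # ['memory']
```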

Understanding these factors and workload types is essential for selecting the optimal cloud server configuration that meets specific performance requirements.

Optimizing Cloud Server Performance for Different Workloads

Optimizing cloud server performance is essential for reliable application and service delivery. Different workloads have different resource demands, so server configurations should be tailored to each one. This involves adjusting CPU and memory allocation, optimizing storage and network configuration, and putting effective monitoring and management in place.

Strategies for Optimizing Server Configurations

To optimize server configurations for different workloads, consider the following strategies:

- CPU Allocation: Determine the appropriate number of CPU cores and threads required for the workload. Consider factors such as workload intensity, peak usage patterns, and future growth.

- Memory Allocation: Allocate sufficient memory to handle the workload’s data requirements. Monitor memory usage and adjust allocations as needed to prevent performance bottlenecks.

- Storage Optimization: Choose the appropriate storage type (e.g., HDD, SSD, NVMe) based on workload requirements. Consider factors such as read/write speed, IOPS, and data durability.

- Network Configuration: Optimize network settings to ensure efficient data transfer. Consider factors such as network bandwidth, latency, and security requirements.
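The CPU and memory allocation bullets come down to sizing for peak demand plus expected growth while leaving headroom. One common rule of thumb, sketched below in Python, is capacity = ceil(peak × (1 + growth) / target utilization). The 20% growth and 70% target-utilization defaults are assumptions chosen for illustration, not recommendations from any provider.

```python
import math


def required_capacity(
    peak_usage: float, growth: float = 0.2, target_utilization: float = 0.7
) -> int:
    """Units (vCPUs or GiB) to provision so that peak load plus expected growth
    runs at or below the target utilization. Defaults are illustrative."""
    if peak_usage < 0 or growth < 0:
        raise ValueError("peak_usage and growth must be non-negative")
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    return max(1, math.ceil(peak_usage * (1 + growth) / target_utilization))


# Peak of 6 busy cores -> 6 * 1.2 / 0.7 ~= 10.3, so provision 11 vCPUs.
print(required_capacity(6))  # 11
# Peak of 24 GiB resident memory, 25% growth, 80% target -> 37.5, so 38 GiB.
print(required_capacity(24, growth=0.25, target_utilization=0.8))  # 38
```

In practice the result would then be rounded up to the nearest instance size the provider actually offers.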

Best Practices for Server Performance Management

To effectively manage and monitor server performance, follow these best practices:

- Regular Monitoring: Continuously monitor server metrics such as CPU utilization, memory usage, disk I/O, and network traffic to identify potential issues.

- Performance Profiling: Use tools to profile server performance and identify bottlenecks or areas for improvement.

- Automated Alerts: Set up alerts to notify administrators of performance issues or resource constraints.

- Regular Maintenance: Perform regular maintenance tasks such as software updates, security patches, and hardware upgrades to maintain optimal performance.
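For the performance-profiling step, Python’s standard library ships `cProfile` and `pstats`, which report where time is spent inside an application process. The sketch below profiles a hypothetical request handler; `handle_request`, `parse_records`, and `profile_top` are stand-in names, not part of any library. Application-level profiling like this complements, rather than replaces, host-level metrics such as CPU utilization and disk I/O.

```python
import cProfile
import io
import pstats


def parse_records(n):
    # Hypothetical hot spot: builds many small strings.
    return [str(i) * 3 for i in range(n)]


def handle_request(n=50_000):
    # Hypothetical request handler that we want to profile.
    records = parse_records(n)
    return sum(len(r) for r in records)


def profile_top(func, *args, limit=5):
    """Run func(*args) under cProfile; return its result and a text report
    of the `limit` most expensive calls by cumulative time."""
    profiler = cProfile.Profile()
    profiler.enable()
    try:
        result = func(*args)
    finally:
        profiler.disable()
    stream = io.StringIO()
    pstats.Stats(profiler, stream=stream).sort_stats("cumulative").print_stats(limit)
    return result, stream.getvalue()


if __name__ == "__main__":
    _, report = profile_top(handle_request)
    print(report)
```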

Case Studies of Cloud Server Performance in Real-World Applications

Cloud servers are widely used for a broad range of workloads because they offer scalability, flexibility, and cost-effectiveness for businesses of all sizes. This section presents a case study of a cloud deployment that handled a demanding, variable workload.

We look at the challenge encountered, the solutions implemented, the performance gains achieved, and the factors that contributed to that success. The aim is to give a concrete picture of real-world cloud server performance that can inform decisions about your own applications.

E-commerce Platform on AWS

An e-commerce platform migrated its website and applications to AWS to improve scalability and performance. The platform experienced a significant increase in traffic during peak shopping seasons, which often led to slowdowns and outages. By migrating to AWS, the platform was able to scale its infrastructure up and down as needed, ensuring that its website and applications remained available and responsive even during periods of high demand.
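Scaling up and down with demand is usually driven by a target-tracking rule. The Python sketch below uses the proportional formula documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current × currentMetric / targetMetric), with a tolerance band (0.1 mirrors the HPA default) so small fluctuations do not trigger scaling. It only illustrates the idea: the case study does not say which scaling mechanism the platform used, and the min/max bounds here are assumptions.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_util_pct: int,
    target_util_pct: int,
    min_replicas: int = 2,
    max_replicas: int = 20,
    tolerance: float = 0.1,
) -> int:
    """Proportional target tracking: resize the fleet so average utilization
    moves toward the target. Integer percentages avoid float rounding
    surprises in the ceiling."""
    if current_replicas < 1 or target_util_pct <= 0:
        raise ValueError("need at least one replica and a positive target")
    ratio = current_util_pct / target_util_pct
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: do nothing
    desired = math.ceil(current_replicas * current_util_pct / target_util_pct)
    return max(min_replicas, min(max_replicas, desired))


# Peak season: 4 servers at 90% CPU against a 60% target -> scale out to 6.
print(desired_replicas(4, 90, 60))  # 6
# Quiet period: 10 servers at 20% -> ceil(3.33) = 4.
print(desired_replicas(10, 20, 60))  # 4
```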

The platform also implemented a number of performance optimization techniques, such as using a content delivery network (CDN) to cache static content and using a load balancer to distribute traffic across multiple servers. As a result of these optimizations, the platform was able to achieve a significant improvement in performance, with page load times decreasing by an average of 50%. This led to a noticeable increase in customer satisfaction and sales.
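The load-balancing technique mentioned in the case study can be illustrated with round robin, one of the simplest distribution strategies. This Python sketch is a toy model, not how AWS load balancers are implemented; the backend names are hypothetical, and it simply skips servers that a health check has marked down.

```python
from itertools import cycle
from typing import Iterable


class RoundRobinBalancer:
    """Hands out backends in rotation, skipping any marked unhealthy."""

    def __init__(self, backends: Iterable[str]) -> None:
        self._backends = list(backends)
        if not self._backends:
            raise ValueError("at least one backend is required")
        self._healthy = set(self._backends)
        self._ring = cycle(self._backends)

    def mark_down(self, backend: str) -> None:
        self._healthy.discard(backend)

    def mark_up(self, backend: str) -> None:
        if backend in self._backends:
            self._healthy.add(backend)

    def next_backend(self) -> str:
        # One full lap is enough to find a healthy backend if any exist.
        for _ in range(len(self._backends)):
            backend = next(self._ring)
            if backend in self._healthy:
                return backend
        raise RuntimeError("no healthy backends available")


lb = RoundRobinBalancer(["web-1", "web-2", "web-3"])
print([lb.next_backend() for _ in range(4)])  # ['web-1', 'web-2', 'web-3', 'web-1']
lb.mark_down("web-2")
print([lb.next_backend() for _ in range(3)])  # ['web-3', 'web-1', 'web-3']
```

Production balancers often use smarter policies such as least connections, but the health-check-and-skip idea carries over.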

Emerging Trends in Cloud Server Performance Optimization

Cloud server performance optimization is continuously evolving, driven by advances in hardware, software, and cloud architecture. These trends are shaping how organizations tune their cloud infrastructure and improve application efficiency.

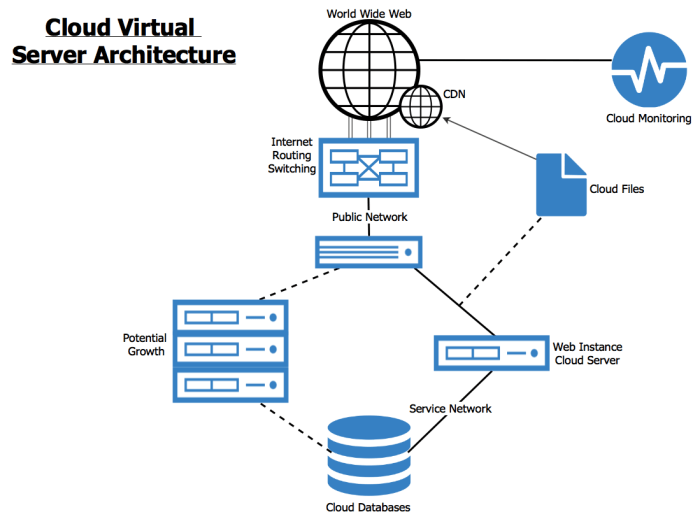

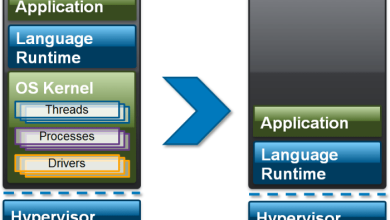

One significant trend is the adoption of cloud-native technologies, such as containers and serverless computing. These technologies enable developers to build and deploy applications more quickly and efficiently, while also reducing the overhead associated with traditional server management. By leveraging cloud-native technologies, organizations can optimize their cloud server performance by reducing resource consumption and improving scalability.

Hardware Advancements

The continuous evolution of hardware is another key trend driving cloud server performance optimization. The introduction of new processor architectures, faster memory technologies, and high-speed networking capabilities is enabling cloud providers to offer more powerful and efficient servers. These hardware advancements provide the foundation for improved application performance, reduced latency, and increased throughput.

Software Innovations

Software innovations are also playing a crucial role in optimizing cloud server performance. The development of new operating systems, virtualization technologies, and performance monitoring tools is empowering businesses to fine-tune their cloud infrastructure and identify areas for improvement. By leveraging these software advancements, organizations can gain greater control over their cloud resources and optimize them for specific workloads and applications.

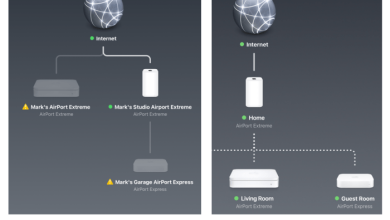

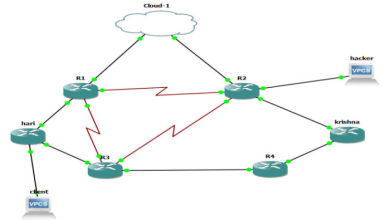

Cloud Architecture Evolution

The evolution of cloud architecture is another emerging trend that is shaping the future of server performance optimization. The adoption of hybrid and multi-cloud strategies is enabling organizations to distribute their workloads across multiple cloud providers and on-premises infrastructure. This approach allows businesses to optimize performance by leveraging the strengths of different cloud platforms and tailoring their infrastructure to specific application requirements.

Best Practices for Cloud Server Performance Management

Optimizing cloud server performance requires proactive monitoring and management. By implementing best practices, organizations can ensure their cloud servers deliver consistent performance and meet the demands of their applications and workloads.

Effective cloud server performance management involves establishing clear guidelines, utilizing monitoring tools, and setting up performance alerts and escalation procedures.

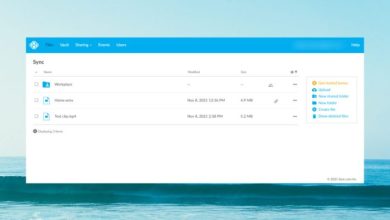

Monitoring and Management Guidelines

Establishing clear guidelines for monitoring and managing cloud server performance is crucial. These guidelines should define:

- Key performance indicators (KPIs) to be monitored, such as CPU utilization, memory usage, and network latency

- Thresholds for performance metrics and actions to be taken when thresholds are exceeded

- Roles and responsibilities for monitoring and managing performance

- Regular performance reviews and optimization strategies
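The threshold guideline can be expressed as a small rule table. In this Python sketch the KPI names and limits are hypothetical placeholders, and a KPI only counts as breached when its last `window` samples all exceed the limit, a common way to keep a single spike from triggering an alert.

```python
from typing import Dict, List, Mapping, Sequence

# Hypothetical limits; set these from your own baseline measurements.
THRESHOLDS: Dict[str, float] = {
    "cpu_pct": 80.0,
    "memory_pct": 85.0,
    "latency_ms": 250.0,
}


def breached_kpis(
    samples: Sequence[Mapping[str, float]],
    thresholds: Mapping[str, float] = THRESHOLDS,
    window: int = 3,
) -> List[str]:
    """Return KPIs whose most recent `window` samples all exceed their limit.

    A KPI missing from a sample is treated as not breached for that sample.
    """
    if window < 1:
        raise ValueError("window must be >= 1")
    if len(samples) < window:
        return []  # not enough data yet to call anything sustained
    recent = samples[-window:]
    return sorted(
        kpi
        for kpi, limit in thresholds.items()
        if all(kpi in s and s[kpi] > limit for s in recent)
    )


history = [
    {"cpu_pct": 91, "memory_pct": 60, "latency_ms": 300},
    {"cpu_pct": 88, "memory_pct": 62, "latency_ms": 120},
    {"cpu_pct": 95, "memory_pct": 61, "latency_ms": 310},
]
print(breached_kpis(history))  # ['cpu_pct']
```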

Tools and Techniques

Various tools and techniques can be used to identify and resolve performance issues. These include:

- Cloud monitoring services provided by cloud providers

- Third-party monitoring tools

- Performance profiling tools

- Load testing tools
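Load-testing tools commonly summarize results as latency percentiles (p50, p95, p99) rather than averages, because a healthy-looking mean can hide a slow tail. The Python sketch below computes nearest-rank percentiles from a list of response times; the sample values are invented for illustration, and individual tools may use slightly different percentile definitions.

```python
import math
from typing import Dict, Iterable, Sequence


def percentile(sorted_values: Sequence[float], p: float) -> float:
    """Nearest-rank percentile of already-sorted data, for 0 < p <= 100."""
    if not sorted_values:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    rank = math.ceil(p * len(sorted_values) / 100)  # 1-based rank
    return sorted_values[rank - 1]


def latency_summary(
    latencies_ms: Iterable[float], ps: Sequence[float] = (50, 95, 99)
) -> Dict[str, float]:
    """Summarize response times as a dict like {'p50': ..., 'p95': ...}."""
    data = sorted(latencies_ms)
    return {f"p{p}": percentile(data, p) for p in ps}


# Invented sample: nine fast requests and one slow outlier.
samples = [42, 38, 45, 40, 41, 39, 44, 43, 37, 900]
print(latency_summary(samples))  # {'p50': 41, 'p95': 900, 'p99': 900}
```

Here the mean is 126.9 ms, which describes neither the typical request (about 41 ms) nor the slow one.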

Performance Alerts and Escalation Procedures

Performance alerts notify administrators when performance metrics exceed predefined thresholds. Escalation procedures define the steps to be taken when performance issues occur, including:

- Automated actions, such as scaling up resources or restarting services

- Notifications to administrators via email, SMS, or other channels

- Escalation to higher levels of support if the issue cannot be resolved promptly
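The escalation steps above form a time-based ladder. This Python sketch encodes one as data; the 0/5/30-minute timings and the action strings are hypothetical, and a real setup would hook these steps into an alerting or paging service.

```python
from typing import List, Sequence, Tuple

# (minutes unresolved, action) pairs, ordered by time. Timings are illustrative.
ESCALATION_POLICY: Sequence[Tuple[float, str]] = (
    (0, "automated: scale up resources or restart the service"),
    (5, "notify: alert administrators via email/SMS"),
    (30, "escalate: hand off to the next support tier"),
)


def due_actions(
    minutes_unresolved: float,
    policy: Sequence[Tuple[float, str]] = ESCALATION_POLICY,
) -> List[str]:
    """Return every action whose trigger time has been reached."""
    if minutes_unresolved < 0:
        raise ValueError("minutes_unresolved must be non-negative")
    return [action for after, action in policy if minutes_unresolved >= after]


print(len(due_actions(2)))   # 1: automated remediation only
print(len(due_actions(12)))  # 2: plus administrator notification
print(len(due_actions(45)))  # 3: escalated to higher-level support
```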

Final Summary

In conclusion, understanding how cloud server performance varies across workloads is essential for organizations that want to get the most from their cloud infrastructure investment. By taking a data-driven approach to server optimization, businesses can tailor their cloud servers to the demands of each workload, improving both performance and cost efficiency.