How to Measure Cloud Server Performance: A Comprehensive Guide

This guide explains how to monitor, analyze, and optimize cloud server performance so that your infrastructure runs efficiently and reliably.

Cloud server performance is paramount to the success of any cloud-based application or service. With the right metrics and tools, you can gain valuable insights into your cloud environment, identify bottlenecks, and optimize your resources for maximum efficiency. This guide will equip you with the knowledge and strategies to measure, analyze, and improve the performance of your cloud servers.

Key Performance Indicators (KPIs) for Cloud Server Performance

Measuring cloud server performance is crucial for optimizing resource utilization, ensuring application reliability, and meeting business objectives. Identifying relevant Key Performance Indicators (KPIs) is the cornerstone of effective performance monitoring. These metrics provide quantitative data that helps administrators assess the efficiency, stability, and overall health of cloud servers.

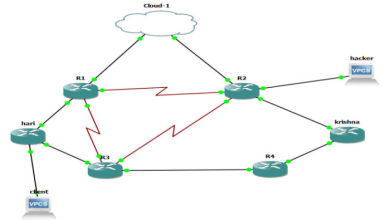

Useful metrics for measuring cloud server performance include latency, throughput, and resource utilization. Analyzing them helps you tune servers and keep operations running smoothly. For detailed examples and best practices drawn from real-world scenarios, refer to the Cloud diagram server case studies.

KPI Selection

Selecting appropriate KPIs depends on specific business objectives. For instance, e-commerce platforms may prioritize KPIs related to website response time and transaction success rates, while data analytics firms may focus on KPIs measuring data processing throughput and latency.

Common KPIs

Commonly used KPIs for cloud server performance include:

- CPU Utilization: Percentage of CPU resources consumed over a period.

- Memory Utilization: Percentage of RAM used compared to total available memory.

- Network Bandwidth: Amount of data transferred over the network, both inbound and outbound.

- Disk I/O: Number of read and write operations performed on storage devices.

- Response Time: Time taken for a server to respond to requests, typically measured in milliseconds.

- Uptime: Percentage of time the server is operational and available to users.
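As a rough illustration, several of these KPIs reduce to simple arithmetic over raw samples. The sketch below assumes you already have the underlying numbers (used and total memory, downtime in seconds, a list of response times); the function names are hypothetical, and the percentile uses the nearest-rank method, which is only one of several common definitions.

```python
import math

def utilization_percent(used: float, total: float) -> float:
    """Share of a resource in use, as a percentage of the total."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * used / total

def uptime_percent(total_seconds: float, downtime_seconds: float) -> float:
    """Percentage of the period during which the server was available."""
    if total_seconds <= 0:
        raise ValueError("total_seconds must be positive")
    return 100.0 * (total_seconds - downtime_seconds) / total_seconds

def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample such that at least
    pct percent of all samples are less than or equal to it."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100.0 * len(ordered)))
    return ordered[rank - 1]

# Example with made-up numbers: 6 GB of 16 GB RAM in use,
# and ten response-time samples in milliseconds.
memory_pct = utilization_percent(6, 16)
response_times_ms = [120, 95, 110, 480, 105, 98, 102, 130, 99, 101]
p95_ms = percentile(response_times_ms, 95)
```

Reporting a high percentile alongside the median is a common choice for response time, because a single slow request (480 ms in the sample data) barely moves the median but dominates what some users experience.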

Monitoring Tools and Techniques

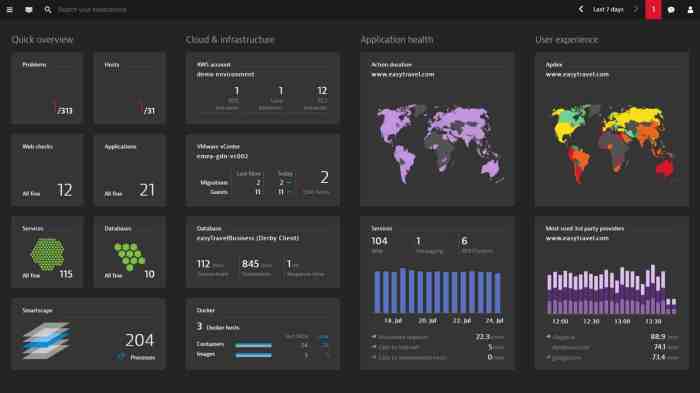

Monitoring cloud server performance is crucial for maintaining optimal functionality and identifying areas for improvement. Various tools and techniques are available to assist in this process.

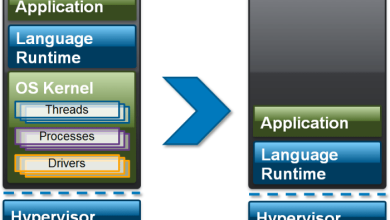

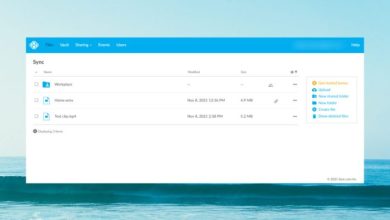

Analyzing metrics such as CPU utilization, memory usage, and network bandwidth lets you identify bottlenecks and improve resource allocation. For a visual representation of how the different server components interact and contribute to overall performance, refer to the Cloud diagram server resources guide.

When selecting monitoring tools, it’s essential to consider factors such as the scale of your infrastructure, the specific metrics you want to track, and your budget.

Monitoring Tools

- Cloud Monitoring Tools: These tools are designed specifically for monitoring cloud environments and offer features such as automated metric collection, real-time monitoring, and anomaly detection.

- Open-Source Monitoring Tools: These tools are free to use and provide flexibility in terms of customization and integration. Examples include Nagios, Zabbix, and Prometheus.

- Commercial Monitoring Tools: These tools offer a wide range of features, including comprehensive monitoring capabilities, advanced analytics, and enterprise-grade support.

Techniques

- Metric Monitoring: This involves tracking key performance indicators (KPIs) such as CPU utilization, memory usage, and network throughput.

- Log Monitoring: This technique involves analyzing server logs to identify errors, performance issues, and security events.

- Synthetic Monitoring: This technique simulates user interactions to monitor the performance of applications and services from an end-user perspective.
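To make log monitoring concrete, here is a minimal sketch that scans access-log lines and computes the share of server errors. The log layout (timestamp, method, path, status code, latency in milliseconds, separated by spaces) is an assumption for illustration only; real servers use many different formats, and the function name is hypothetical.

```python
from collections import Counter

def summarize_access_log(lines):
    """Count requests by status class (2xx, 4xx, ...) and compute the
    fraction of parsed requests that ended in a 5xx server error."""
    classes = Counter()
    for line in lines:
        parts = line.split()
        # Assumed layout: timestamp method path status latency_ms
        if len(parts) < 5 or not parts[3].isdigit():
            classes["unparsed"] += 1
            continue
        status = int(parts[3])
        classes[f"{status // 100}xx"] += 1
    parsed = sum(n for key, n in classes.items() if key != "unparsed")
    error_rate = classes["5xx"] / parsed if parsed else 0.0
    return dict(classes), error_rate

# Made-up sample lines in the assumed format.
sample_log = [
    "2024-05-01T12:00:00Z GET /index.html 200 35",
    "2024-05-01T12:00:01Z GET /api/items 500 120",
    "2024-05-01T12:00:02Z POST /api/items 201 48",
    "2024-05-01T12:00:03Z GET /missing 404 12",
    "malformed line",
]
counts, error_rate = summarize_access_log(sample_log)
```

Counting unparsable lines separately, rather than silently dropping them, makes it easier to notice when the log format changes and the monitor stops seeing real traffic.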

Performance Metrics and Analysis

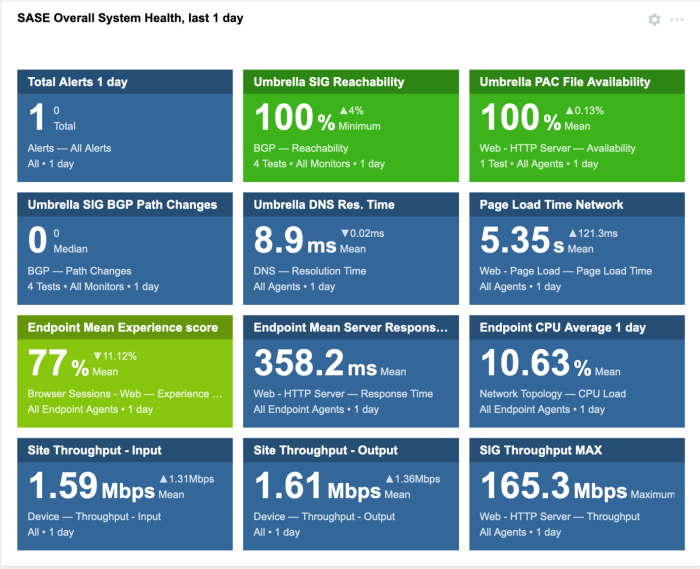

Measuring and analyzing cloud server performance is crucial for optimizing resource utilization, identifying bottlenecks, and ensuring optimal user experience. Various performance metrics provide insights into different aspects of server performance, enabling proactive monitoring and timely intervention.

Performance Metrics

Key performance metrics collected from cloud servers include:

- CPU Utilization: Percentage of CPU time consumed by processes.

- Memory Utilization: Amount of physical memory in use.

- Disk I/O: Read and write operations per second on storage devices.

- Network I/O: Incoming and outgoing traffic volume.

- Response Time: Time taken to process requests.

- Uptime: Percentage of time the server is operational.

- Error Rate: Frequency of server errors.
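Metrics such as disk and network I/O are often exposed as cumulative counters, so a per-second rate has to be derived from two snapshots. The sketch below assumes counters arrive as plain dictionaries; the counter names and the function name are hypothetical.

```python
def counter_rates(previous, current, interval_seconds):
    """Per-second rates from two snapshots of cumulative counters."""
    if interval_seconds <= 0:
        raise ValueError("interval_seconds must be positive")
    rates = {}
    for name, new_value in current.items():
        old_value = previous.get(name)
        # Skip counters that are new or that went backwards
        # (for example after a reboot reset them to zero).
        if old_value is None or new_value < old_value:
            continue
        rates[name] = (new_value - old_value) / interval_seconds
    return rates

# Made-up snapshots taken 60 seconds apart.
before = {"disk_reads": 1000, "disk_writes": 500, "net_bytes_in": 10_000}
after = {"disk_reads": 1600, "disk_writes": 800, "net_bytes_in": 4_000}
rates = counter_rates(before, after, 60)
```

In this example the network counter went backwards, so it is left out of the result instead of producing a misleading negative rate.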

Analysis Techniques

Performance data analysis involves identifying trends, patterns, and anomalies. Techniques used include:

- Trend Analysis: Monitoring metrics over time to identify gradual changes or performance degradation.

- Comparative Analysis: Comparing metrics across different servers or time periods to identify performance gaps.

- Threshold Monitoring: Setting thresholds for key metrics and triggering alerts when exceeded.

- Bottleneck Identification: Isolating performance bottlenecks by analyzing resource utilization and identifying points of congestion.
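Two of these techniques, threshold monitoring and trend analysis, can be sketched in a few lines. The example assumes evenly spaced samples of a single metric. Requiring several consecutive breaches before alerting is a design choice made here to reduce noise, not something the techniques themselves prescribe, and the function names are hypothetical.

```python
def breaches(samples, threshold, min_consecutive=3):
    """Start indexes of runs where the metric stays above the threshold
    for at least min_consecutive samples in a row."""
    alerts = []
    run_start = None
    for i, value in enumerate(samples):
        if value > threshold:
            if run_start is None:
                run_start = i
            # Report each run once, at the moment it becomes long enough.
            if i - run_start + 1 == min_consecutive:
                alerts.append(run_start)
        else:
            run_start = None
    return alerts

def trend_slope(samples):
    """Least-squares slope of the metric per sample interval."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = (n - 1) / 2
    mean_y = sum(samples) / n
    numerator = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(samples))
    denominator = sum((x - mean_x) ** 2 for x in range(n))
    return numerator / denominator

# Made-up CPU utilization samples (percent).
cpu = [40, 85, 92, 95, 60, 91, 93, 50]
alert_starts = breaches(cpu, threshold=80)
```

A positive slope that persists across long windows points to gradual degradation, even when no individual sample crosses a threshold.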

Performance Baselines and Trends

Establishing performance baselines is essential for evaluating performance changes. Baselines are typically set during initial server configuration or after performance optimization. Tracking performance trends over time helps identify performance degradation or improvements, enabling proactive adjustments.
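One simple way to use a baseline is to summarize a reference period as a mean and standard deviation, then flag later measurements that fall far outside it. This is a minimal sketch under the assumption that the metric is roughly stable during the reference period; the three-sigma cutoff and the function names are illustrative choices.

```python
from statistics import mean, stdev

def build_baseline(samples):
    """Summarize a reference period as (mean, sample standard deviation)."""
    return mean(samples), stdev(samples)

def deviates_from_baseline(value, baseline, max_sigma=3.0):
    """True if value lies more than max_sigma standard deviations
    away from the baseline mean."""
    base_mean, base_std = baseline
    if base_std == 0:
        # A perfectly flat baseline: any change counts as a deviation.
        return value != base_mean
    return abs(value - base_mean) / base_std > max_sigma

# Made-up response times (ms) recorded right after initial configuration.
baseline = build_baseline([100, 102, 98, 101, 99])
```

With this baseline, a later reading of 104 ms would be treated as normal variation, while 110 ms would be flagged for investigation.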

Capacity Planning and Optimization

Capacity planning and optimization are crucial for ensuring the optimal performance of cloud servers. By proactively assessing the resource requirements and adjusting the server configuration accordingly, businesses can avoid performance bottlenecks, reduce costs, and enhance the overall efficiency of their cloud infrastructure.

To effectively plan and optimize cloud server capacity, several factors need to be considered, including the workload characteristics, traffic patterns, peak usage times, and growth projections. Based on these factors, businesses can determine the optimal server size and configuration, ensuring that the server has sufficient resources to handle the expected workload while avoiding overprovisioning and unnecessary expenses.
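The sizing reasoning above can be turned into a back-of-the-envelope calculation. The sketch assumes load is measured in requests per second and that each server's sustainable throughput is known from testing; the 70% target utilization is an illustrative headroom figure, and the function name is hypothetical.

```python
import math

def servers_needed(peak_rps, per_server_rps, growth_rate=0.0, target_utilization=0.7):
    """Servers required to carry the projected peak load while keeping
    each server at or below the target utilization."""
    if per_server_rps <= 0:
        raise ValueError("per_server_rps must be positive")
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    projected_peak = peak_rps * (1 + growth_rate)
    usable_per_server = per_server_rps * target_utilization
    return math.ceil(projected_peak / usable_per_server)

# Made-up inputs: 1,200 requests/s at peak today, 25% expected growth,
# and servers that each sustain 250 requests/s.
count = servers_needed(peak_rps=1200, per_server_rps=250, growth_rate=0.25)
```

Here the projected peak is 1,500 requests/s and each server contributes 175 usable requests/s, which rounds up to 9 servers. Running without headroom (target utilization of 1.0) would suggest 6, leaving no margin for spikes.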

Scaling Cloud Servers

As cloud workloads evolve and demands fluctuate, it becomes necessary to scale cloud servers to meet changing requirements. Scaling involves adjusting the server’s resources, such as CPU, memory, and storage, to match the current demand. This ensures that the server has the capacity to handle peak loads without compromising performance and avoids underutilization during periods of low demand.

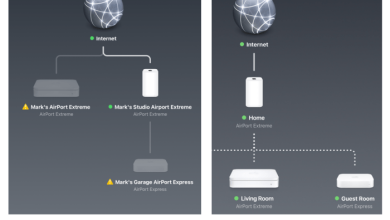

Cloud providers offer various scaling options, including:

- Vertical Scaling (Scale Up/Down): Adjusting the resources of an existing server by increasing or decreasing its CPU, memory, and storage capacity.

- Horizontal Scaling (Scale Out/In): Adding servers to, or removing them from, the infrastructure to distribute the workload across multiple servers.

- Autoscaling: Configuring the cloud platform to automatically adjust server resources based on predefined rules and metrics, ensuring optimal performance and resource utilization.
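An autoscaling rule can be as simple as scaling the server count in proportion to how far a metric is from its target. The sketch below is one such proportional rule, written for illustration; it is not any particular provider's policy, and the parameter names, the 60% CPU target, and the tolerance band are assumptions.

```python
import math

def desired_servers(current, observed_cpu, target_cpu=60.0,
                    min_servers=1, max_servers=10, tolerance=0.1):
    """Proportional horizontal-scaling rule with a dead band and limits."""
    ratio = observed_cpu / target_cpu
    # Inside the tolerance band, do nothing to avoid constant resizing.
    if abs(ratio - 1.0) <= tolerance:
        return current
    wanted = math.ceil(current * ratio)
    return max(min_servers, min(max_servers, wanted))

# Made-up scenarios for a fleet of 4 servers targeting 60% average CPU.
scale_out = desired_servers(4, observed_cpu=90.0)   # load is 1.5x the target
scale_in = desired_servers(4, observed_cpu=30.0)    # load is half the target
```

The minimum and maximum bounds protect against both scaling to zero and runaway cost, and the tolerance band keeps small fluctuations from triggering a resize on every evaluation.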

Benchmarking and Best Practices

Benchmarking and best practices play a vital role in optimizing cloud server performance. By understanding industry standards and comparing performance against competitors, businesses can identify areas for improvement and implement effective strategies.

Best Practices

Industry best practices for cloud server performance management include:

- Monitoring key performance indicators (KPIs): Regularly track metrics such as CPU utilization, memory usage, network latency, and response times to identify potential bottlenecks.

- Implementing auto-scaling: Automatically adjust server resources based on demand to prevent performance degradation during peak usage.

- Optimizing cloud architecture: Design and configure cloud servers to maximize performance, such as using appropriate instance types, storage options, and network configurations.

- Leveraging cloud-native tools: Utilize cloud-provided tools and services to monitor, manage, and optimize server performance.

- Conducting regular performance audits: Periodically evaluate server performance and identify areas for improvement, such as optimizing code or reducing unnecessary processes.

Case Studies

Successful cloud server performance optimization case studies demonstrate the benefits of implementing best practices. For example, Netflix significantly improved video streaming performance by migrating to a cloud-based architecture and optimizing server configurations.

Benchmarking

Benchmarking against industry standards and competitors provides valuable insights into server performance. It helps businesses identify areas where their performance lags and set realistic goals for improvement. Benchmarking can also be used to compare different cloud providers and select the best option for specific performance requirements.
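For comparing servers or providers on the same workload, a small timing harness is often enough to get started. The sketch below times an arbitrary Python callable with `time.perf_counter`; the workload, the run counts, and the function names are placeholders, and a real benchmark would run a representative workload on each candidate environment.

```python
import time
from statistics import mean, median

def benchmark(func, runs=20, warmup=3):
    """Time repeated calls to func and summarize the results in milliseconds."""
    for _ in range(warmup):
        func()  # warm caches before measuring
    timings_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        func()
        timings_ms.append((time.perf_counter() - start) * 1000.0)
    return {
        "runs": runs,
        "min_ms": min(timings_ms),
        "median_ms": median(timings_ms),
        "mean_ms": mean(timings_ms),
        "max_ms": max(timings_ms),
    }

def relative_change(candidate_ms, baseline_ms):
    """Percentage by which the candidate is slower (positive) or
    faster (negative) than the baseline."""
    return 100.0 * (candidate_ms - baseline_ms) / baseline_ms

# Placeholder workload; substitute a request or job typical of your service.
result = benchmark(lambda: sum(range(1000)), runs=5, warmup=1)
```

Running the same harness on two environments and passing their median timings to `relative_change` gives a single figure for the performance gap, which is easier to track over time than raw timings.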

Conclusion

Measuring cloud server performance well lets you make informed decisions, optimize your infrastructure, and deliver a better user experience. By following the best practices outlined in this guide, you can keep your cloud servers performing at their peak, supporting business growth and customer satisfaction.