Cloud Server Performance Issues: Enhance Efficiency and Optimize Performance

Cloud server performance issues can significantly impact the efficiency and user experience of any online application. Understanding and addressing them is essential for keeping applications fast and reliable. This guide covers the main areas where performance problems arise (resource utilization, network performance, storage, scalability and load balancing, and monitoring) and outlines strategies for optimizing each.

Resource Utilization Analysis

Resource utilization is a key indicator of server performance. It measures how much of a server’s capacity, such as CPU, memory, disk, and network bandwidth, is in use. Sustained high utilization can lead to performance degradation, bottlenecks, and potential downtime.

To monitor resource utilization, track metrics such as CPU utilization, memory utilization, disk I/O utilization, and network utilization. These can be collected with monitoring agents, dedicated performance monitoring tools, or operating system utilities (on Linux, for example, top, vmstat, and iostat).
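
As a concrete example of collecting one of these metrics, the sketch below computes CPU utilization on Linux from two samples of the aggregate `cpu` line in `/proc/stat`, the kernel counters that tools such as top are built on. It assumes a Linux host; the function names (`parse_cpu_line`, `cpu_utilization`, `read_cpu_line`) are illustrative and not part of any library.

```python
import time
from pathlib import Path
from typing import Tuple

# Field order of the aggregate "cpu" line in /proc/stat (Linux):
# user nice system idle iowait irq softirq steal guest guest_nice
# guest and guest_nice are already included in user and nice, so only the
# first eight fields are summed to avoid double counting.
_COUNTED_FIELDS = 8


def parse_cpu_line(line: str) -> Tuple[int, int]:
    """Return (total_jiffies, idle_jiffies) for a /proc/stat 'cpu' line."""
    fields = [int(x) for x in line.split()[1:]]
    counted = fields[:_COUNTED_FIELDS]
    total = sum(counted)
    idle = counted[3] + counted[4]  # idle + iowait
    return total, idle


def cpu_utilization(prev_line: str, curr_line: str) -> float:
    """CPU utilization in percent between two samples of the 'cpu' line."""
    prev_total, prev_idle = parse_cpu_line(prev_line)
    curr_total, curr_idle = parse_cpu_line(curr_line)
    delta_total = curr_total - prev_total
    if delta_total <= 0:
        return 0.0
    busy = delta_total - (curr_idle - prev_idle)
    return 100.0 * busy / delta_total


def read_cpu_line(path: str = "/proc/stat") -> str:
    """Read the aggregate 'cpu' line, which is the first line of /proc/stat."""
    with open(path) as f:
        return f.readline()


if __name__ == "__main__":
    if Path("/proc/stat").exists():
        first = read_cpu_line()
        time.sleep(1.0)
        second = read_cpu_line()
        print(f"CPU utilization over 1 s: {cpu_utilization(first, second):.1f}%")
    else:
        print("/proc/stat not found; this sketch assumes a Linux host")
```

Utilization is computed from the difference between two samples because the counters are cumulative since boot; a single reading only gives the long-run average.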

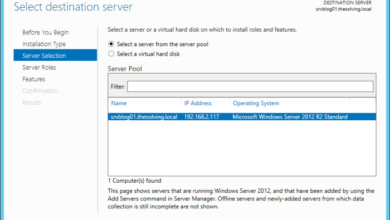

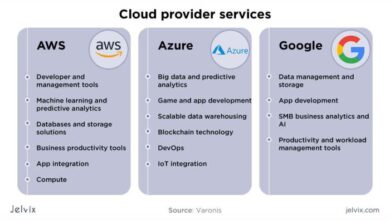

Optimizing Resource Utilization

Optimizing resource utilization is essential for improving server performance. Several strategies can be employed to achieve this:

- Right-sizing: Ensuring that the server has the appropriate amount of resources to meet its workload requirements.

- Workload Management: Distributing workload across multiple servers to prevent overloading any single server.

- Resource Monitoring and Alerting: Continuously monitoring resource utilization and setting up alerts to identify potential issues early on.

- Auto-scaling: Automatically adjusting the server’s resources based on real-time demand.

- Resource Isolation: Isolating different workloads on the same server using virtualization or containers to prevent resource contention.
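
Auto-scaling decisions are often made by target tracking: compare the observed metric with a target and scale proportionally. The sketch below uses the same ratio as the Kubernetes Horizontal Pod Autoscaler, `desired = ceil(current * observed / target)`, plus a tolerance band (10% here, matching the HPA default) and min/max bounds. The function name and defaults are illustrative assumptions, not a cloud provider’s API.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Target-tracking scaling decision using the proportional HPA-style ratio.

    current_metric and target_metric must use the same unit, for example
    average CPU utilization in percent across all instances.
    """
    if current_replicas <= 0 or target_metric <= 0:
        raise ValueError("current_replicas and target_metric must be positive")
    ratio = current_metric / target_metric
    # Inside the tolerance band, keep the current size to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        proposed = current_replicas
    else:
        proposed = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, proposed))
```

For example, 4 instances averaging 90% CPU against a 60% target yield 6 instances; at 63% the ratio is within tolerance and the size stays at 4. Rounding up biases the decision toward having slightly more capacity than strictly needed.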

Network Performance Assessment

Network performance is crucial for cloud server operations as it affects the speed, reliability, and availability of data transfer. Issues with network performance can result in slow response times, data loss, and overall performance degradation.

Common network performance issues include high latency, packet loss, and jitter. Latency refers to the delay in data transmission, packet loss occurs when data packets are dropped during transmission, and jitter is the variation in latency. These issues can be caused by factors such as network congestion, hardware malfunctions, or incorrect network configurations.
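
Given a series of probe round-trip times, these three metrics can be computed directly. In the sketch below, a lost probe is recorded as `None`, and jitter is taken as the mean absolute difference between consecutive replies; that is one simple definition among several (RTP, for instance, uses a smoothed estimator defined in RFC 3550). The function name `summarize_probes` is hypothetical.

```python
import statistics
from typing import Optional, Sequence


def summarize_probes(rtts_ms: Sequence[Optional[float]]) -> dict:
    """Summarize probe results in milliseconds; None marks a probe with no reply."""
    if not rtts_ms:
        raise ValueError("need at least one probe result")
    received = [r for r in rtts_ms if r is not None]
    loss_pct = 100.0 * (len(rtts_ms) - len(received)) / len(rtts_ms)
    summary = {"sent": len(rtts_ms), "received": len(received), "loss_pct": loss_pct}
    if received:
        summary["avg_ms"] = statistics.fmean(received)
        summary["min_ms"] = min(received)
        summary["max_ms"] = max(received)
    # Jitter: mean absolute change between consecutive replies (lost probes skipped).
    if len(received) >= 2:
        diffs = [abs(b - a) for a, b in zip(received, received[1:])]
        summary["jitter_ms"] = statistics.fmean(diffs)
    return summary
```

For `[10.0, 12.0, None, 11.0, 15.0]`, this reports 20% loss, a 12 ms average, and about 2.33 ms of jitter.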

Diagnosing and Troubleshooting Network Performance Problems

Diagnosing and troubleshooting network performance problems involves several techniques, including:

- Ping test: Measures the round-trip time and packet loss between two hosts.

- Traceroute: Traces the path taken by data packets from one host to another, identifying any bottlenecks or issues along the way.

- Packet capture: Captures and analyzes network traffic to identify specific issues, such as dropped packets or excessive latency.

- Performance monitoring tools: Continuously monitor network performance and provide insights into potential issues.

By utilizing these techniques, IT professionals can identify the root cause of network performance problems and implement appropriate solutions, such as optimizing network configurations, upgrading hardware, or resolving network congestion.
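
The classic ping tool sends ICMP echo requests, which require raw-socket privileges to craft from a program. A rough, unprivileged substitute is to time TCP handshakes to a service port, as sketched below. This measures connection setup time rather than ICMP round-trip time, so treat it as an approximation; the function name `tcp_connect_rtts` and its parameters are assumptions for illustration.

```python
import socket
import time
from typing import List, Optional


def tcp_connect_rtts(
    host: str,
    port: int,
    count: int = 5,
    timeout: float = 2.0,
    interval: float = 0.2,
) -> List[Optional[float]]:
    """Time `count` TCP handshakes to host:port.

    Returns one entry per attempt: the handshake time in milliseconds,
    or None if the connection was refused, unreachable, or timed out.
    """
    # Resolve the name once so DNS lookup time is not included in each sample.
    address = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)[0][4]
    ip = address[0]

    results: List[Optional[float]] = []
    for attempt in range(count):
        start = time.perf_counter()
        try:
            # create_connection returns after the TCP three-way handshake completes.
            with socket.create_connection((ip, port), timeout=timeout):
                results.append((time.perf_counter() - start) * 1000.0)
        except OSError:
            results.append(None)
        if attempt < count - 1:
            time.sleep(interval)
    return results
```

For example, `tcp_connect_rtts("example.com", 443)` returns a list of handshake times in milliseconds, with `None` for attempts that were refused or timed out.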

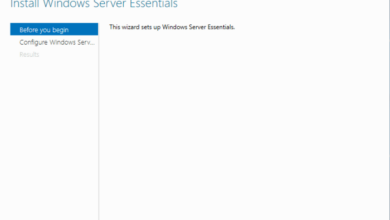

Addressing cloud server performance issues can also involve revisiting server design. Server architecture, network topology, and resource allocation all affect performance, and an efficient design can remove bottlenecks and improve the overall responsiveness and reliability of your cloud infrastructure.

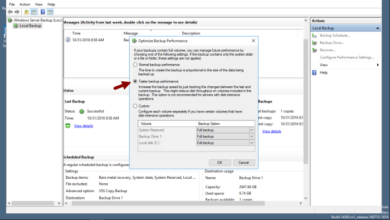

Storage Performance Evaluation

Storage performance plays a critical role in the overall performance of cloud servers. Different types of storage options are available, each with unique characteristics that impact performance.

Assessing storage performance involves evaluating metrics such as IOPS (Input/Output Operations Per Second), throughput, and latency. IOPS measures how many read/write operations the storage completes per second, throughput measures how much data it transfers per second (for example, in MB/s), and latency is the time it takes for a single I/O request to complete.
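
These metrics are linked by two simple relationships. Throughput equals IOPS multiplied by the I/O size, and by Little’s law the number of outstanding I/Os equals IOPS multiplied by latency, so IOPS is roughly queue depth divided by latency. The helpers below are a back-of-the-envelope sketch with hypothetical names; real volumes also enforce provisioned IOPS and throughput caps, so the Little’s law estimate only holds until one of those limits is reached.

```python
def throughput_mib_s(iops: float, block_size_kib: float) -> float:
    """Throughput in MiB/s implied by an IOPS rate at a fixed I/O size in KiB."""
    return iops * block_size_kib / 1024.0


def iops_from_queue_depth(queue_depth: int, latency_ms: float) -> float:
    """Little's law: outstanding I/Os = IOPS * latency, so IOPS = depth / latency."""
    if latency_ms <= 0:
        raise ValueError("latency_ms must be positive")
    return queue_depth / (latency_ms / 1000.0)
```

For example, 3,000 IOPS of 16 KiB requests is 46.875 MiB/s, and a queue depth of 32 at 0.5 ms per request corresponds to roughly 64,000 IOPS. The same arithmetic shows why a workload issuing one request at a time (queue depth 1) is limited by latency rather than by the volume’s IOPS rating.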

Storage Types and Performance Characteristics

- Block Storage: Stores data in fixed-size blocks, providing high IOPS and low latency for applications requiring fast access to small data blocks (e.g., databases, virtual machines).

- File Storage: Stores data in a hierarchical file system, offering high throughput for large file transfers and data analysis workloads.

- Object Storage: Stores data as immutable objects, providing low-cost, high-capacity storage for archival and backup purposes.

Storage Performance Optimization

Optimizing storage performance requires tailoring the storage solution to the specific workload requirements.

- Provisioning Adequate IOPS: Ensure the storage can sustain the expected I/O load, including peaks, to avoid performance bottlenecks.

- Minimizing Latency: Place latency-sensitive workloads, such as databases, on low-latency storage so that each I/O request completes quickly.

- Choosing the Right Storage Type: Select the storage type that best suits the workload’s access patterns and performance requirements.
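
Before and after changing storage, it helps to measure rather than assume. The sketch below times single 4 KiB writes, each followed by `fsync`, which approximates the synchronous write latency seen at a queue depth of 1. It is a rough check under stated assumptions: results depend on the filesystem holding the temporary directory, and tmpfs or write-back caches can make the numbers look unrealistically good. Dedicated benchmarking tools such as fio give far more control. The function name `sync_write_latency_ms` is hypothetical.

```python
import os
import statistics
import tempfile
import time
from typing import Dict, Optional


def sync_write_latency_ms(
    samples: int = 50, block_size: int = 4096, directory: Optional[str] = None
) -> Dict[str, float]:
    """Time `samples` writes of `block_size` bytes, each followed by fsync.

    Writes go to a temporary file in `directory` (default: the system temp
    directory), which is deleted afterwards. Assumes samples >= 1.
    """
    payload = os.urandom(block_size)
    latencies = []
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        for _ in range(samples):
            start = time.perf_counter()
            os.write(fd, payload)
            os.fsync(fd)  # stop the clock only after fsync asks the device to persist the data
            latencies.append((time.perf_counter() - start) * 1000.0)
    finally:
        os.close(fd)
        os.remove(path)

    avg = statistics.fmean(latencies)
    return {
        "samples": float(samples),
        "avg_ms": avg,
        "max_ms": max(latencies),
        # At queue depth 1 each write waits for the previous one, so IOPS is
        # roughly 1000 ms divided by the average latency.
        "approx_iops": 1000.0 / avg if avg > 0 else float("inf"),
    }
```

Running it against a directory on each candidate volume, for example `sync_write_latency_ms(directory="/mnt/data")` where the path is a placeholder, gives a quick side-by-side comparison.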

Scalability and Load Balancing

Scalability and load balancing are critical aspects of ensuring optimal cloud server performance. They enable servers to handle varying workloads effectively, ensuring consistent performance and high availability.

Designing effective scalability and load balancing strategies starts with understanding how incoming requests can be distributed across servers.

Load Balancing Algorithms

Load balancing algorithms distribute incoming requests across multiple servers to optimize resource utilization and improve response times. Some common algorithms include:

- Round Robin: Requests are assigned to each available server in turn, so every server receives an equal share.

- Least Connections: Each request is sent to the server with the fewest active connections.

- Weighted Round Robin: Servers are assigned weights based on their capacity, and each server receives a share of requests proportional to its weight.
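
The three algorithms can be sketched in a few lines each. The classes below are illustrative and not taken from any particular load balancer. The weighted class uses a “smooth” weighted round robin, which interleaves servers rather than sending each server’s whole share in one burst; that is an implementation choice, and simpler schemes (such as repeating each server in the rotation according to its weight) also match the description above.

```python
import itertools
from typing import Dict, List


class RoundRobin:
    """Hand out servers in a fixed rotating order."""

    def __init__(self, servers: List[str]) -> None:
        self._cycle = itertools.cycle(servers)

    def pick(self) -> str:
        return next(self._cycle)


class LeastConnections:
    """Pick the server with the fewest active connections (ties: first listed)."""

    def __init__(self, servers: List[str]) -> None:
        self.active: Dict[str, int] = {s: 0 for s in servers}

    def pick(self) -> str:
        server = min(self.active, key=self.active.__getitem__)
        self.active[server] += 1  # caller must call release() when the request ends
        return server

    def release(self, server: str) -> None:
        self.active[server] -= 1


class WeightedRoundRobin:
    """Smooth weighted round robin: each server's share is proportional to its weight."""

    def __init__(self, weights: Dict[str, int]) -> None:
        self.weights = dict(weights)
        self.total = sum(self.weights.values())
        self.current: Dict[str, int] = {s: 0 for s in self.weights}

    def pick(self) -> str:
        # Every server accumulates its weight; the highest running total wins
        # and is then pushed back by the sum of all weights.
        for server, weight in self.weights.items():
            self.current[server] += weight
        best = max(self.current, key=self.current.__getitem__)
        self.current[best] -= self.total
        return best
```

With weights `{"a": 5, "b": 1, "c": 1}`, seven consecutive picks yield `a, a, b, a, c, a, a`: five requests to `a` and one each to `b` and `c`.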

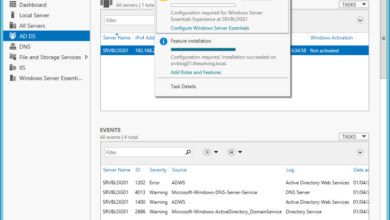

Monitoring and Alerting

Monitoring and alerting are crucial for proactive performance management of cloud servers. By continuously monitoring key performance indicators (KPIs), you can detect potential issues before they become significant problems and resolve them while server performance is still acceptable.

KPIs to Monitor

Key performance indicators (KPIs) are metrics that provide insights into the performance of your cloud server. Some common KPIs to monitor include:

- CPU utilization

- Memory utilization

- Disk I/O utilization

- Network traffic

- Response times

Setting Thresholds

Once you have identified the KPIs to monitor, you need to set appropriate thresholds for each metric. These thresholds will determine when alerts are triggered. For example, you might set a threshold of 80% CPU utilization, which means that an alert will be triggered if the CPU utilization exceeds 80%.
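
A threshold that fires on a single sample can be noisy, since a brief CPU spike above 80% may not indicate a real problem. Many tools therefore require the condition to hold for a period before alerting (CloudWatch alarms use evaluation periods, Prometheus alerting rules use a `for` clause). The sketch below applies the same idea in plain Python; the class name `ThresholdAlert` and the three-sample default are assumptions for illustration.

```python
class ThresholdAlert:
    """Fire when a metric stays above a threshold for N consecutive samples."""

    def __init__(self, threshold: float, consecutive: int = 3) -> None:
        self.threshold = threshold
        self.consecutive = consecutive
        self._breaches = 0
        self.firing = False

    def observe(self, value: float) -> bool:
        """Record one sample; return True only when the alert starts firing."""
        if value > self.threshold:
            self._breaches += 1
        else:
            # Any sample at or below the threshold resets the count and resolves the alert.
            self._breaches = 0
            self.firing = False
        if self._breaches >= self.consecutive and not self.firing:
            self.firing = True
            return True
        return False
```

Fed the samples 85, 90, 70, 82, 88, 95, 97, 60 with a threshold of 80 and `consecutive=3`, it fires once, on the 95 sample, and resolves when the value drops to 60. Returning `True` only on the transition avoids sending a new notification for every sample while the alert is active.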

Monitoring and Alerting Tools

There are a variety of monitoring and alerting tools available, both open source and commercial. Some popular options include:

- CloudWatch (AWS)

- Azure Monitor (Azure)

- Google Cloud Monitoring (GCP)

- Prometheus

- Nagios

These tools allow you to configure custom alerts based on the KPIs you are monitoring. When an alert is triggered, you will receive a notification via email, SMS, or other channels.

By implementing a comprehensive monitoring and alerting system, you can stay on top of the performance of your cloud servers and take proactive steps to resolve issues before they impact your users.

Outcome Summary

By addressing cloud server performance issues, organizations can improve the reliability, scalability, and user satisfaction of their digital platforms. The strategies outlined in this guide provide a roadmap for optimizing resource utilization, network performance, storage efficiency, and scalability. Applying these practices, and monitoring their effect continuously, helps ensure that cloud servers keep delivering consistent performance as workloads grow.